ACID transactions and implementation in a PostgreSQL Database

ACID transactions ensure that database transactions are reliable and consistent, even in the face of errors, power failures, or other problems. The acronym ACID stands for atomicity, consistency, isolation, and durability.

These are four critical properties of relational databases (such as PostgreSQL, MySQL, etc.), non-relations databases (such as MongoDB, etc.), and even graph databases (such GraphQL). In this article, you will learn about transactions and a detailed understanding of the four properties.

Table of Contents

- What is a transaction?

- Atomicity

- Isolation

- Consistency

- Durability

- Optimistic locking and eventual consistency

- Transaction implementation in Postgres database

- Summary

What is a Transaction?

Introduction

| Account_id | Balance |

|---|---|

| 1 | $500 |

| 2 | $7000 |

Send $400 from Account 1 to Account 2

BEGIN TRANSACTION

SELECT balance FROM account WHERE id= 1

balance > 400Transaction Lifespan

-

Transaction BEGIN: A transaction always starts with the

BEGINkeyword. This indicated to the database that it was about to start a transaction with multiple queries in it. -

Transaction COMMIT: Every time a transaction is written, it does not persist in the database unless it has been committed hence the transaction

COMMITwhich commits all the queries from when the queries begin and persist in the database. - Transaction ROLLBACK: This helps you undo changes or queries written. Let’s assume you got a crash in the middle of your transaction after writing ten thousand queries, then this becomes very important to ROLLBACK and retrieves those queries.

Nature of transaction

Usually, transactions are used to change and modify data, however, it is perfectly normal to have a read-only transaction example you want to generate a report and you want to get a consistent snapshot based on the time of transaction.

A: Atomicity

This is one of the four ACID properties that define database system management, and it is a very critical concept.

Atomicity ensures that all the operations within a transaction are treated as a single, indivisible unit of work. This means that if any operation within the transaction fails, the entire transaction will be rolled back and none of the changes will be committed to the database.

“All the queries in a transaction must succeed”, Think of an Atom, that basically, cannot be split. Atomicity is similar, it cannot be split, and all its queries behave as one unit of work and must succeed. If there is even one failure all queries must undergo a rollback. If the database went down prior to a commit of a transaction, all the successful queries in the transactions should roll back.

Using the example of an account transaction, where we want to send money from account 1 to account 2 by debiting account 1. If there is a database crash before updating/transferring the $400 to Account 1, for a badly implemented database system, we would just lose $400. This is really bad and brings about inconsistency, not only losing money but also you have no idea where the money went to.

Therefore, a lack of ATOMICITY leads to inconsistency, and we will talk about CONSISTENCY later on.

In an Atomic transaction, the transaction will roll back all queries if only one or more queries failed or the database fails.

C: Consistency

Consistency ensures that the transaction brings the database from one valid state to another. This means that the transaction must follow all the rules and constraints defined in the database schema, such as unique keys, foreign keys, and check constraints.

Consistency plays a lot of roles in both relational database and non-relational, Some system sacrifices consistency for speed, performance, and scalability. Consistency occurs in both the data (on disk) and in the reads (due to a different instance running).

Consistency in data

This represented the state that actually persisted in the data. This mainly involves enforcing.

- foreign keys referential integrity between two tables or documents an example can be seen when creating a user-like system, when a user(s) like an image or blog, the blog or image should persist the actual number of likes it has got from the user table.

- atomicity – data should persist across the database.

- Isolation – based on the isolation level which we will talk about later should return correct reads from two concurrent parallel tables.

Consistency in reads

For example, you have a database, you update a value X in the database, and now the next read must give you value X. That is what consistency in reading means. If a transaction committed a change will a new transaction immediately see the change? when this does not work we get an inconsistent database. This affects the system as a whole. Relational and NoSQL databases suffer from this.

I: Isolation

This is the third property that is very important among the ACID properties.

Isolation ensures that concurrent transactions do not interfere with each other. Each transaction is executed as if it is the only transaction in the system, even though other transactions may be executing simultaneously. It is the result of having transactions as a separate entity (isolation) from other concurrent transactions which may lead to reads phenomena and Isolation levels.

Read phenomena

Case study: Sales product database table showing quantity and price of each product.

| PID | QUANTITY | PRICE |

|---|---|---|

| Product 1 | 10 | $5 |

| Product 2 | 20 | $4 |

- Dirty reads: A dirty read occurs when your current transaction reads (method of getting/selecting data from the database) from some other transaction writes that have not fully been flushed/committed yet without the full conviction that the write can commit or maybe the database could crash, and changes could be rollback.

Let’s get a better understanding from our case study:

A dirty read in this case:

We begin a transaction to read data from the SALES table and another concurrent transaction was written to the same SALES Table as shown below:

- The second transaction (Second Concurrent transaction)

- Non-repeatable reads – Reads that involve reading a value twice in the same transaction. This involves different queries that yield the same value from the same transaction.

Again, with our case study above:

If the first transaction begins and there is a second transaction but this time the second transaction actually made it commit even before the first transaction finishes or made the second query to calculate the SUM(), so the value is actually 15 but the second query in transaction 1SELECT SUM(QUANTITY*PRICE) FROM SALES gives $155 when it should be $130.

This is not a dirty read problem but a non-repeatable read because during the second query, there was a second read under the hood through an aggregation function, and as a result, you got an inconsistent value, and fixing this is expensive. Some relational databases such as Postgres help solve this by creating a separate version for each instance while others do not.

-

Phantom reads – Reads that can not be read because they do not exist yet example is using the RANGE query to select activities between two values and you got a result, now if a new insertion is made that satisfies the RANGE condition, the read is going to give a different result than the original.

Take an example from our SALES Table case study:

We begin the first transaction to read data from the database.

First transaction:

BEGIN TRANSACTIONSELECT PID, QUANTITY*PRICE FROM SALES

and a parallel second transaction that is inserted into the sales table and commits that change.

Second transaction:

BEGIN TRANSACTIONINSERT INTO SALES VALUES ('Product 3', 10, 1);COMMIT TRANSACTION

If we do the second query to get the SUM () of quantity and price from the first transaction,

First transaction:

SELECT SUM(QUANTITY*PRICE) FROM SALES

we get $140 when it should be $130 because we read a committed value that showed up in our range query.

- Lost updates – These involve the attempt to read your written queries during a transaction; some transactions can lead to the loss of that writing by giving you a new different read.

Let’s analyze this with the sales table also.

Here we begin two transactions in parallel concurrently, says in the first transaction, we update the sales with id =1 to quantity increment of 10

First Transaction:

BEGIN TRANSACTIONUPDATE SALES SET QUANTITY = QUANTITY + 10 WHERE PID = 1;

Next, we also run a query in the second transaction to run an update on the same sales with id =1 and commit.

Second Transaction:

BEGIN TRANSACTIONUPDATE SALES SET QUANTITY = QUANTITY +5 WHERE PID =1;

COMMIT TRANSACTION

This second transaction has now overridden the first transaction and we lost the result from the first transaction when the second query runs to calculate the sum from the table

SELECT SUM(QUANTITY*PRICE) FROM SALESwe get $155 when it should be $180 because our update was overwritten by another transaction and as a result “lost” this can be solved by locking the row till a transaction is completed.

Isolation Levels for inflight transactions

It’s also a good idea to use the appropriate isolation level for your transactions. Different isolation levels provide different levels of isolation between transactions and choosing the right isolation level can help to improve the performance of your database.

In PostgreSQL for example, you can specify the isolation level of a transaction using the **SET TRANSACTION ISOLATION LEVEL** statement. For example, to set the isolation level to **READ COMMITTED**, you can use the following statement:

SET TRANSACTION ISOLATION LEVEL READ COMMITTED;There are several isolation levels available, each with its own trade-offs in terms of performance and consistency. Here is a brief overview of the different isolation levels:

**READ COMMITTED**: This is the default isolation level in PostgreSQL. It provides a moderate level of isolation, ensuring that a transaction cannot read data that has not been committed by other transactions.**SERIALIZABLE**: This isolation level provides the highest level of isolation, ensuring that transactions are executed in a serialized order. This can improve consistency but can also impact performance.**REPEATABLE READ**: This isolation level ensures that a transaction will see the same data every time it reads from the database, but it may see changes made by other transactions that have not yet been committed.**READ UNCOMMITTED**: This isolation level provides the lowest level of isolation, allowing a transaction to read data that has not yet been committed by other transactions. This can improve performance but can also compromise consistency.**SNAPSHOTS**– Each query in a transaction only sees changes that have been committed up to the start of the transaction. It’s like a snapshot version of the database at that moment. This is guaranteed to get rid of any read phenomenon. When you start a transaction, you snapshot the version of that transaction and guarantee to always gets that version of your transaction read even if there is a change from another transaction.

Choosing the right isolation level depends on the specific requirements of your application and the trade-offs you are willing to make in terms of performance and consistency. In general, it’s a good idea to start with the default **READ COMMITTED** isolation level and adjust as needed based on your application’s needs.

Database implementation of isolation

Each DBMS implements the Isolation level differently.

- Pessimistic Approach – Row level locks, table locks, and page locks to avoid lost updates.

- Optimistic Approach – No locks, just track if things changed and fail the transaction if so

- Repeatable read “locks” the rows it reads but it could be expensive if you read a lot of rows. Postgres implements Repeated read as a snapshot. That is why you do not get phantom reads with postgres.

- Serializable is usually implemented with optimistic concurrency control.

D: Duracity

Durability ensures that the changes made by a committed transaction are permanent and will survive any subsequent failures. This is typically achieved by writing or persisting the changes to disk or other non-volatile storage. This involves a system that can recover all writes and see all changes after committed even when the system crashes or loss of power.

Durability is slow, as it writes directly to disk but some database writes to in-memory and creates snapshots. An example of such a database system is REDIS.

Durability techniques

- WAL – Write ahead log: Writing a lot of data to disk is expensive (Indexes, data, files, rows, etc.). That is why DBMs persist in a compressed version of the changes as WAL. Any changes go to disk first. When a crash happens, we can read all the WAL data and rebuild the state.

- Asynchronous snapshot: As we write we keep everything in the memory and asynchronously in the background we snapshot everything to disk at once.

- AOF – Append only file: This is similar to the WAL, keep track of the changes before it happens and then write everything to disk.

- Operating System (OS) cache – A write request in OS usually goes to the OS cache. When the writes go to the OS cache, an OS crash, or machine restart could lead to loss of data. Fsync OS command forces write to always go to disk, this command can be expensive and slow down commits.

Optimistic locking and eventual consistency

It’s also worth noting that ACID transactions are not the only way to ensure the reliability and consistency of a database. There are other approaches that can be used to achieve similar results, such as optimistic locking and eventual consistency.

-

Optimistic locking: a technique that allows multiple transactions to execute concurrently but verifies that the data has not been modified by other transactions before committing the changes. If the data has been modified, the transaction is rolled back, and the changes are discarded.

Optimistic locking can be a good choice in situations where the likelihood of conflicting updates is low, as it allows transactions to execute concurrently and improve performance.

- Eventual consistency: a design pattern that allows data to be temporarily inconsistent while it is being updated but ensures that the data will eventually become consistent. This can be achieved through the use of techniques such as eventual database updates and distributed transactions.

Eventual consistency can be a good choice in distributed systems where it is not always possible to guarantee immediate consistency, as it allows the system to continue operating while updates are being made.

Transactions Implementation in Postgres database

PostgreSQL is a popular open-source relational database management system that supports ACID transactions.

Using raw SQL

Let’s see an example of how you might implement ACID transactions in PostgreSQL using the **pg** library in Node.js:

const { Client } = require('pg');

async function updateUser(id, name) {

const client = new Client();

await client.connect();

try {

// Start a new transaction

await client.query('BEGIN');

// Update the user's name

await client.query(`UPDATE users SET name = $1 WHERE id = $2`, [name, id]);

// Commit the changes

await client.query('COMMIT');

} catch (err) {

// If an error occurs, roll back the changes

await client.query('ROLLBACK');

throw err;

} finally {

// Close the client connection

await client.end();

}

}This code uses the **pg** library to connect to a PostgreSQL database and update a user’s name within a transaction. If any errors occur during the transaction, the changes are rolled back using the **ROLLBACK** statement.

To start a transaction, the code uses the **BEGIN** statement as earlier mentioned above. This starts a new transaction and allows you to perform a series of database operations as a single unit of work.

The code then updates the user’s name using the **UPDATE** statement and passes the new name and the user’s ID as parameters.

Once the update is complete, the code uses the **COMMIT** statement to commit the changes and end the transaction. This will save the changes and make them permanent in the database.

If any errors occur during the transaction, the **catch** block is executed and the **ROLLBACK** statement is used to undo the changes and end the transaction.

Finally, the **finally** block closes the client connection using the **end()** method.

By using transactions and the ACID properties, you can ensure that your database operations are reliable and consistent, even in multi-user environments where multiple transactions may be occurring simultaneously.

Using an Object-Relational-Mapping (ORM).

It’s worth noting that in this example, the **pg** library is being used to execute raw SQL statements to manage the transactions. Some ORM (Object-Relational Mapping) libraries, such as Sequelize, also provide support for ACID transactions and allow you to manage transactions using a higher-level API.

For example, here is how you might implement the same functionality using Sequelize:

const { Sequelize, Model } = require('sequelize');

class User extends Model {}

const sequelize = new Sequelize('database', 'username', 'password', {

dialect: 'postgres'

});

User.init({

name: Sequelize.STRING

}, { sequelize, modelName: 'user' });

async function updateUser(id, name) {

// Start a new transaction

const transaction = await sequelize.transaction();

try {

// Update the user's name

await User.update({ name }, { where: { id } }, { transaction });

// Commit the changes

await transaction.commit();

} catch (err) {

// If an error occurs, roll back the changes

await transaction.rollback();

throw err;

}

}This code uses the **transaction()** method of the Sequelize instance to start a new transaction. The **update()** method is then used to update the user’s name, passing the transaction as an option.

To commit the changes, the code calls the **commit()** method on the transaction object. If any errors occur, the **catch** block is executed and the **rollback()** method is called to undo the changes.

Using Sequelize or another ORM can make it easier to manage transactions and implement the ACID properties in your application, as you don’t need to write raw SQL statements.

It’s important to note that while ACID transactions help to ensure the reliability and consistency of a database, they can also have a performance impact. Since transactions require the database to maintain a record of the changes made within the transaction, they can consume additional resources and slow down database operations.

To mitigate this performance impact, it’s generally recommended to keep transactions as short and focused as possible. This means avoiding long-running transactions or transactions that perform a large number of operations.

Summary

ACID transactions are a reliable and widely used technique for ensuring the consistency and reliability of a database. However, there are other approaches that may be more suitable in specific situations, such as optimistic locking and eventual consistency. The best approach will depend on the specific requirements and trade-offs of your application.

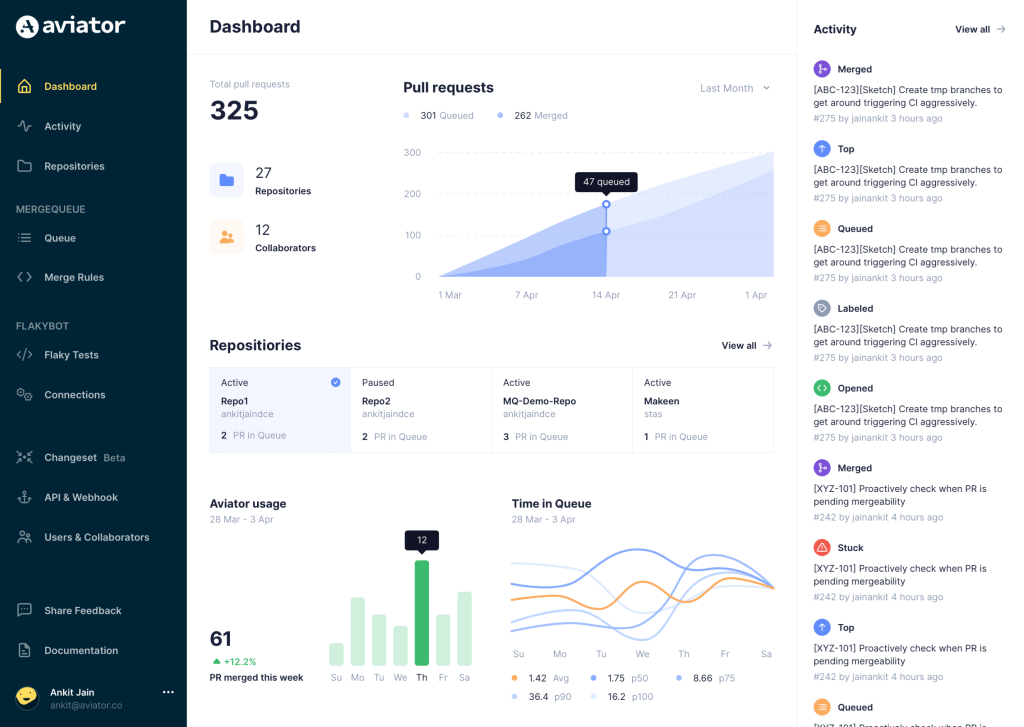

Aviator: Automate your cumbersome merge processes

Aviator automates tedious developer workflows by managing git Pull Requests (PRs) and continuous integration test (CI) runs to help your team avoid broken builds, streamline cumbersome merge processes, manage cross-PR dependencies, and handle flaky tests while maintaining their security compliance.

There are 4 key components to Aviator:

- MergeQueue – an automated queue that manages the merging workflow for your GitHub repository to help protect important branches from broken builds. The Aviator bot uses GitHub Labels to identify Pull Requests (PRs) that are ready to be merged, validates CI checks, processes semantic conflicts, and merges the PRs automatically.

- ChangeSets – workflows to synchronize validating and merging multiple PRs within the same repository or multiple repositories. Useful when your team often sees groups of related PRs that need to be merged together, or otherwise treated as a single broader unit of change.

- FlakyBot – a tool to automatically detect, take action on, and process results from flaky tests in your CI infrastructure.

- Stacked PRs CLI – a command line tool that helps developers manage cross-PR dependencies. This tool also automates syncing and merging of stacked PRs. Useful when your team wants to promote a culture of smaller, incremental PRs instead of large changes, or when your workflows involve keeping multiple, dependent PRs in sync.