What’s a bors, and why (don’t) you want it?

Bors-NG is a GitHub app that prevents merge skew, a problem where patches work at review time but fail when they’re actually merged, because the main branch changed in the meantime.

The classic example is when one patch renames a function, while another patch adds a call to it by its old name and nobody notices because they’re reviewed separately. In a traditional, continuous testing system where tests are run only after the main branch gets updated, this means reverting the faulty patch.

But doing this at scale is annoying.

Deciding which patch is the bad one can be complicated, or even politically charged. And as long as the broken branch is the default development head, it interferes with everyone else’s work: they rely on the test suite, and it fails for reasons unrelated to their own changes.

Many major open source projects don’t have this problem, because their pull requests are merged by “bots” that merge the code, test it, then promote the already-tested merged code to the main branch. Bors-NG is an implementation of the sort of automation you find in the Rust project, the Kubernetes project, and many more.

The process is this: You test it first, then promote it, not the other way around. It’s worth automating because it’s tedious, not because it’s rocket science.

Should you even bother?

Many software projects produce a ton of value without ever implementing anything like bors. While some of them would certainly benefit, many of them really wouldn’t. Any tooling, whether it’s a merge bot, a linter, a scanner, or any automated test suite at all, is a liability. If it isn’t producing enough value to justify the costs, don’t use it.

Here are some costs that come from using a merge bot like Bors-NG:

- If the bot gets hacked, it can push arbitrary code to the repository

- If the bot goes down, development grinds to a halt (or the bot gets bypassed)

- If the test suite doesn’t cover enough functionality, then the bot is pointless

- If the test suite takes more than a couple of hours, then you’ll need a more complex triage process to make sure patches don’t accumulate faster than they can be merged (batching helps, but if a PR actually does fail, it might take all day for bisecting to identify the one at fault)
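The cost of bisecting a failed batch can be estimated with a small simulation. The sketch below is a simplified model of batch-and-bisect, not Bors-NG's actual code: a batch is tested as a whole, and on failure it is split in half and each half is retried. The function name `land_with_bisect` and the PR labels are assumptions made for the example.

```python
from typing import Callable, List, Tuple

Tests = Callable[[List[str]], bool]


def land_with_bisect(main: List[str], batch: List[str],
                     passes: Tests) -> Tuple[List[str], List[str], int]:
    """Try to land a batch; on failure, split it in half and retry each half.

    Returns (landed PRs, rejected PRs, number of full test-suite runs).
    """
    if not batch:
        return [], [], 0
    if passes(main + batch):
        return list(batch), [], 1
    if len(batch) == 1:
        return [], list(batch), 1
    mid = len(batch) // 2
    left_ok, left_bad, left_runs = land_with_bisect(main, batch[:mid], passes)
    # The right half is tested on top of whatever the left half managed to land.
    right_ok, right_bad, right_runs = land_with_bisect(
        main + left_ok, batch[mid:], passes)
    return left_ok + right_ok, left_bad + right_bad, 1 + left_runs + right_runs


def suite(state: List[str]) -> bool:
    """Pretend test suite: it fails whenever the one faulty PR is present."""
    return "pr5" not in state


batch = [f"pr{i}" for i in range(1, 9)]
landed, rejected, runs = land_with_bisect([], batch, suite)
```

With eight queued PRs and one bad one, this model needs seven full test runs to land the other seven. If the runs happen one after another and the suite takes two hours, that is fourteen hours for a single batch.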

The two root problems

But the particular problems with Bors-NG mostly stem from two root problems:

- If your project is too small, and the operational overhead of running Bors-NG exceeds the value, then it might make sense to manually run the test suite. If this is the approach you go with, then the important part is to ensure that you don’t forget to run the test suite before release. Consider how `make distcheck` in GNU projects combines the steps of running the test suite and producing a release tarball. If I were using automake, I would combine `make distcheck` with a script that performs the upload, so that performing a release without running the tests requires more effort than running them (a form of poka-yoke).

- If your project is too big, and your test suite takes all day to run, it might make sense to do what Firefox does and have a human and/or AI “sheriff” build failures instead of bors-style automatic rejection. This requires you to have someone do that, but it’s usually more accurate than Bors-NG style bisecting, while being faster than a linear process like Homu, and cheaper than a concurrent process like Zuul. There’s still quite a bit of automation in this process, but it’s different from Bors-NG.
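For the small-project case, the idea of tying the upload to `make distcheck` could look something like the following release script. This is only a sketch: `./upload.sh` is a hypothetical upload script, and the `run` parameter exists so the logic can be exercised without actually invoking `make`.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], int]


def run_command(cmd: Sequence[str]) -> int:
    """Run a command and return its exit status."""
    return subprocess.run(list(cmd)).returncode


def release(run: Runner = run_command,
            upload_cmd: Sequence[str] = ("./upload.sh",)) -> bool:
    """Build and test the tarball, and upload only if that succeeded.

    `./upload.sh` is a placeholder for whatever performs the upload.
    """
    if run(["make", "distcheck"]) != 0:
        return False  # tests or tarball build failed: nothing gets uploaded
    return run(list(upload_cmd)) == 0
```

Because the only documented way to publish is to call `release()`, skipping the tests means going around the script, which takes more effort than running it.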

Determining if Bors-NG is right for you

Now that we’ve got all that out of the way, here are the top three pieces of advice for anyone incorporating Bors-NG (or any test suite) into a project, after identifying the project as a good fit:

- Consider having a reduced part of your test suite run when a PR is opened and reserve the entire battery of tests for the Bors-NG queue. It’ll save you a lot of compute time. You can also incorporate heuristics for identifying tests that are likely to be affected by a PR (only hard-and-fast rules should be used for skipping tests in the actual Bors-NG queue).

- Focus on making your test suite run fast. Not only will your queue empty quicker, but developers are more likely to run the test suite locally before submitting a PR if the tests run quickly, and batches will be smaller so bisecting will be faster. The improvements are highly non-linear when your test suite runs fast.

- Focus on making your test suite deterministic. Flaky tests that fail sometimes are very bad because they result in unproblematic PRs being rejected. Fuzz testing should be run outside of the Bors-NG queue.
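The first piece of advice, a reduced suite when a PR is opened and the full suite in the queue, can be sketched as two selection functions. Everything here is an assumption for illustration: the directory layout, the suite names, and the one hard-and-fast rule (a change that touches only `docs/` skips the tests).

```python
from typing import Dict, List, Sequence

# Hypothetical layout: source directory prefix -> test suites that exercise it.
SUITES_BY_PREFIX: Dict[str, List[str]] = {
    "src/parser/": ["tests/parser"],
    "src/net/": ["tests/net", "tests/integration"],
}
ALL_SUITES = ["tests/parser", "tests/net", "tests/integration"]


def suites_for_pr(changed_files: Sequence[str]) -> List[str]:
    """Heuristic subset to run when a PR is opened.

    Any file the heuristic does not recognise falls back to the full suite.
    """
    selected = set()
    for path in changed_files:
        matches = [suite
                   for prefix, suites in SUITES_BY_PREFIX.items()
                   if path.startswith(prefix)
                   for suite in suites]
        if not matches:
            return list(ALL_SUITES)
        selected.update(matches)
    return sorted(selected)


def suites_for_queue(changed_files: Sequence[str]) -> List[str]:
    """Selection for the merge queue: hard-and-fast rules only.

    The single rule here skips tests when every changed file is documentation.
    """
    if changed_files and all(p.startswith("docs/") for p in changed_files):
        return []
    return list(ALL_SUITES)
```

The asymmetry is deliberate: a wrong guess at PR-open time costs a later rejection in the queue, while a wrong guess in the queue lets broken code reach the main branch.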

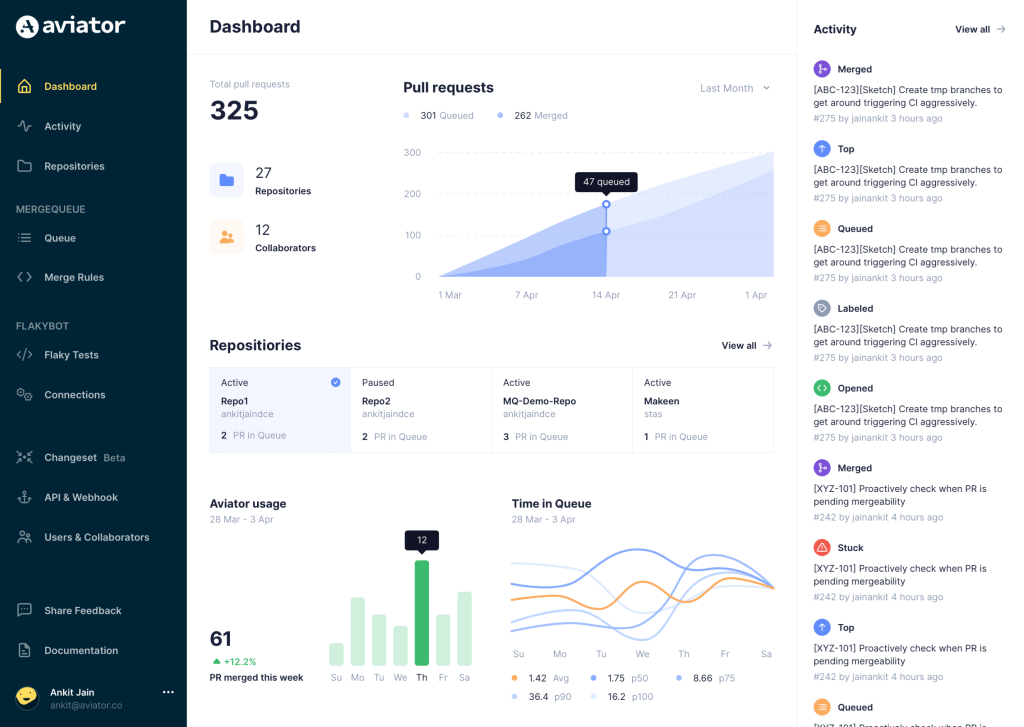

Aviator: Automate your cumbersome merge processes

Aviator automates tedious developer workflows by managing git Pull Requests (PRs) and continuous integration test (CI) runs to help your team avoid broken builds, streamline cumbersome merge processes, manage cross-PR dependencies, and handle flaky tests while maintaining their security compliance.

There are 4 key components to Aviator:

- MergeQueue – an automated queue that manages the merging workflow for your GitHub repository to help protect important branches from broken builds. The Aviator bot uses GitHub Labels to identify Pull Requests (PRs) that are ready to be merged, validates CI checks, processes semantic conflicts, and merges the PRs automatically.

- ChangeSets – workflows to synchronize validating and merging multiple PRs within the same repository or multiple repositories. Useful when your team often sees groups of related PRs that need to be merged together, or otherwise treated as a single broader unit of change.

- FlakyBot – a tool to automatically detect, take action on, and process results from flaky tests in your CI infrastructure.

- Stacked PRs CLI – a command line tool that helps developers manage cross-PR dependencies. This tool also automates syncing and merging of stacked PRs. Useful when your team wants to promote a culture of smaller, incremental PRs instead of large changes, or when your workflows involve keeping multiple, dependent PRs in sync.