How we built one of the most complex apps on top of GitHub

Aviator is a developer productivity platform that solves common scaling challenges faced by engineering teams as they grow their team size and code complexity. As you might expect, we are heavily integrated on top of GitHub and have developed a love/hate relationship with it.

Overview

Let me just start by saying that we love GitHub. We use it every day and can’t imagine living without it.

Here’s why:

- It has been the go-to place for most of the open-source projects in the last decade,

- a pretty decent review interface for collaboration,

- flexible integration for running custom CI/CD pipelines,

- very elaborate APIs covering a lot of customization capabilities,

- strong developer support – it’s very easy to find references and examples.

So then, why is our relationship love/hate? GitHub works really well for 90% of use cases, but when you start hitting edge cases or when you are building something at scale, things can get complicated very quickly.

Building the app

Getting started on building a new GitHub app was fairly simple, but we ran into a considerable number of challenges as we scaled our app. Here, I’ll discuss these challenges and how we resolved them.

Missing API capabilities

Although the GitHub APIs are fairly elaborate, we have our wishlist of APIs that are missing from GitHub today.

- Rebase – there are ways to create a merge commit using the APIs, and we have even found ways to create a squash commit using them, but there is no way to rebase a branch. As you can imagine, rebasing can be a complicated process and comes with its own challenges, but it’d be great if there was an API to trigger a rebase for a branch. GitHub does support the “rebase and merge” method for merging a pull request, but not rebasing a branch on its own without a PR.

So how do we support rebasing? Well, as our app evolved, we introduced capabilities to also use the git CLI tool natively. We perform a shallow fetch of references needed to execute a clean rebase and use git operations before pushing the new references back to remote.

This process can take somewhere from a few milliseconds to up to a few seconds depending on the size of the repository and the depth of the rebase.

- No fast forward – Sometimes when creating a new branch there are benefits to creating a completely fresh set of commits, particularly to make sure we don’t rerun CI on certain commits. By default, the git CLI performs a fast-forward merge whenever one is possible, but you can pass the “--no-ff” flag to create a new merge commit:

git merge main --no-ff

But GitHub does not provide a no-ff option using their merge API. We had to build our own workaround using a temporary branch and the commit tree to generate a new commit SHA.
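To make the idea concrete, here is a minimal sketch of one way a no-ff style merge commit could be assembled through GitHub's Git Database API (`POST /repos/{owner}/{repo}/git/commits`). It is not our exact implementation. The function name and arguments are hypothetical, and it assumes the head commit is already a descendant of the base (the fast-forward case), so the merged tree is simply the head commit's tree.

```python
import json


def no_ff_commit_payload(base_sha: str, head_sha: str, head_tree_sha: str, message: str) -> str:
    """Build the JSON body for POST /repos/{owner}/{repo}/git/commits.

    Assumption: head_sha descends from base_sha, so reusing the head
    commit's tree gives the same content a real merge would produce.
    """
    body = {
        "message": message,
        "tree": head_tree_sha,
        # Listing two parents makes this a merge commit with a new SHA,
        # even though a fast-forward would have been possible.
        "parents": [base_sha, head_sha],
    }
    return json.dumps(body)
```

The new commit SHA returned by GitHub would then still need to be attached to a branch, for example a temporary one, before it is useful.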

Network issues

Network issues come with working with any third-party API, and that’s something we knew going in. There are various types of network issues, such as connection errors, read timeouts, and SSL handshake failures, but most of them can be handled in similar ways. For us, there are two main objectives when handling network issues:

- Event retries: Ensure that we don’t lose any event data. For instance, if we receive a webhook from GitHub for a label added, we first cache that information locally and then trigger a background task to handle the event. This way, if a network issue happens while handling the event, we can automatically retry that event.

- Eventual consistency: There are some actions that cannot be blindly retried, for instance merging a PR or opening a new PR. If we hit a network issue while making such an API call, we cannot simply retry the logic, because the first attempt may already have changed the state of the system. For such write actions, we built fallback logic that reviews the state of the action and ensures eventual consistency.

In addition, we also perform retries at the API level using an HTTP requests library.
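The "check the state before retrying" idea for write actions can be sketched roughly as follows. This is an illustrative outline rather than our production code: `merge` and `is_merged` are hypothetical callables standing in for the real API calls, and only connection and timeout errors are treated as retryable.

```python
from typing import Callable


def merge_with_verification(
    merge: Callable[[], None],
    is_merged: Callable[[], bool],
    max_attempts: int = 3,
) -> bool:
    """Attempt a non-idempotent write, verifying state before each retry."""
    for _ in range(max_attempts):
        try:
            merge()
            return True
        except (ConnectionError, TimeoutError):
            # The request may have reached GitHub before the connection
            # failed, so look at the actual state instead of retrying blindly.
            if is_merged():
                return True
    return False
```

The key design choice is that a failed call is never assumed to be a failed action: the retry only happens once the current state confirms the write did not go through.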

Managing unknown/unexpected behaviors

This is where things can get really complicated. Sometimes GitHub may have unexpected or undocumented behavior. In such cases, many times we just have to learn by testing the behavior. Some examples:

- The pull request REST API has a `mergeable_state` property that is not documented. We found an equivalent GraphQL enum and tested various values, but it can still sometimes return an unexpected value. For instance, it may return “blocked” even when some of the optional checks have failed and the PR can still be merged.

- Merging PRs with `.github/workflows` files – it’s unclear when GitHub blocks an app from modifying a PR that includes GitHub workflow files. Sometimes it throws an error and sometimes it doesn’t. We have also seen GitHub throw an HTTP 500 error when this happens.

- Random HTTP 404s – occasionally we start receiving 404s for certain types of resources. For instance, sometimes a commit SHA associated with an active PR, or even a commit SHA from the base branch, will disappear from the GitHub database even though you can see it in the GitHub UI. Likewise, a 404 may happen for feature branches, with references going missing. In some cases, we had to introduce fallback logic that handles the action using the `git` CLI when this happens.

Rate limits

GitHub has very clear documentation on the rate limits granted for an app installation:

GitHub Apps making server-to-server requests use the installation’s minimum rate limit of 5,000 requests per hour. If an application is installed in an organization with more than 20 users, the application receives another 50 requests per hour for each user. Installations that have more than 20 repositories receive another 50 requests per hour for each repository. The maximum rate limit for an installation is 12,500 requests per hour.

GitHub also has best practices for managing rate limits. We have drastically reduced our API usage by relying heavily on webhooks. In addition, we follow a lambda architecture and periodically fetch the state of active PRs, so that we reach eventual consistency even when we miss a webhook.

Secondary rate limits

GitHub also has a concept of secondary rate limits that restrict burst usage of the APIs. These rate limits are harder to protect against as there are no defined metrics here. Using webhooks and avoiding excessive polling are some of the practices they recommend to avoid secondary rate limits.

In addition, we are now building a mechanism to avoid using API tokens in parallel and implementing an exponential backoff when rate limits are hit. We occasionally still do bump up against these rate limits, but GitHub doesn’t provide an explicit reason for when these are reached. Some of our logic on managing network issues also helps to handle these errors in such cases.
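As a rough illustration of the exponential backoff mentioned above, here is a minimal sketch. The `RateLimited` exception and the `call_with_backoff` helper are hypothetical names, and the delay values are placeholders rather than numbers GitHub recommends. `sleep` and `jitter` are injectable so the behavior can be tested without waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical error raised by the API client when a rate limit is hit."""


def call_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
    jitter: Callable[[], float] = random.random,
) -> T:
    """Retry `call` on RateLimited, doubling the wait after each failure."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Scale by a random factor in [0.5, 1.0) so that parallel
            # workers do not all retry at the same moment.
            sleep(delay * (0.5 + 0.5 * jitter()))
    raise AssertionError("unreachable")
```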

Race conditions

If one is building a scalable system dependent on a third-party data source, race conditions are somewhat inevitable. The most common issue we have seen with race conditions is with webhooks.

For instance, we may receive a webhook when a particular GitHub action is successful, and this webhook is then processed in a particular thread. In parallel, a separate thread may make an API call to GitHub to fetch the state of all CI runs, where the state of this GitHub action may still be pending.

One thing to note is that GitHub may return a stale state for a CI run (pending) through the API, even after we have received a successful completion in a webhook. This can cause the state to be captured incorrectly.

Thankfully, we have built our system using a lambda architecture that provides eventual consistency, so the internal state of these conditions is self-healing. In a subsequent run of the CI validation, we will update the final state of the GitHub action.
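A toy model of this self-healing behavior is sketched below. The in-memory `store` dictionary and both function names are hypothetical stand-ins for our real state handling. The point is only that a later reconciliation pass overwrites whatever a stale read left behind.

```python
from typing import Callable, Dict


def record_state(store: Dict[str, str], check_name: str, state: str) -> None:
    """Record a CI state, whether it came from a webhook or an API read."""
    store[check_name] = state


def reconcile(store: Dict[str, str], fetch_states: Callable[[], Dict[str, str]]) -> None:
    """Periodic pass: replace stored states with a fresh snapshot from the API."""
    for check_name, state in fetch_states().items():
        store[check_name] = state
```

If a stale "pending" read lands after the "success" webhook, the store is briefly wrong, and the next `reconcile` call corrects it.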

Locks

We also make heavy use of locking at various levels to avoid inconsistencies and race conditions. These locks ensure that we can continue scaling horizontally, with hundreds of threads processing data coherently. There are 3 main types of locks that we use:

- Repo-level locks – as the name suggests, these are somewhat global locks that we acquire to change the state of the entire repository. Some common examples are constructing a draft PR while maintaining the PR sequence, removing a PR from the queue, and changing the repo configuration.

- PR-level locks – these are used to change the state of the PR, or any kind of data associated with a PR.

- Database-level locks – we use these locks to ensure we can perform certain DB transactions atomically. For instance, querying an existing GitHub status before inserting a new one.
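The repo-level and PR-level locks can be pictured with a small in-process sketch like the one below. The `LockRegistry` class is hypothetical, and it only coordinates threads inside a single process. A horizontally scaled deployment would need a shared lock service, which this example does not attempt to model.

```python
import threading
from contextlib import contextmanager
from typing import Dict, Hashable, Iterator


class LockRegistry:
    """Hands out one lock per repository and one per pull request."""

    def __init__(self) -> None:
        self._guard = threading.Lock()
        self._locks: Dict[Hashable, threading.Lock] = {}

    def _get(self, key: Hashable) -> threading.Lock:
        # The guard makes lock creation itself thread-safe.
        with self._guard:
            return self._locks.setdefault(key, threading.Lock())

    @contextmanager
    def repo(self, repo: str) -> Iterator[None]:
        with self._get(("repo", repo)):
            yield

    @contextmanager
    def pull_request(self, repo: str, number: int) -> Iterator[None]:
        with self._get(("pr", repo, number)):
            yield
```

Work on different PRs proceeds in parallel, while two threads touching the same PR are serialized.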

Using PyGithub

When we started building the app, we chose PyGithub, an unofficial GitHub SDK, because it helped us build quickly. As we scaled, we realized that many constructs of PyGithub are somewhat limiting, and we are now unwinding that dependence. Some limitations:

- PyGithub expects you to fetch specific objects (e.g. PullRequest) before you can take another action (e.g. posting an issue comment on the PR).

- Pagination can be tricky, as it does not let you customize the number of results to fetch per API request. It only has a single global page size.

- does not work well when using a webhook-based payload.

Over time, we expect that we will completely remove the reliance on PyGithub and build our own lightweight client SDK.
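To give a feel for what a lightweight client could look like, here is a minimal sketch that builds the request for commenting on a PR directly from its number, without first fetching a PullRequest object. The function name is hypothetical, and the request is only constructed here, not sent.

```python
import json
import urllib.request

API_ROOT = "https://api.github.com"


def build_issue_comment_request(
    token: str, owner: str, repo: str, number: int, body: str
) -> urllib.request.Request:
    """Build POST /repos/{owner}/{repo}/issues/{number}/comments.

    PR comments go through the issues endpoint, so the PR number is all
    that is needed, e.g. straight from a webhook payload.
    """
    url = f"{API_ROOT}/repos/{owner}/{repo}/issues/{number}/comments"
    return urllib.request.Request(
        url,
        data=json.dumps({"body": body}).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
            "Content-Type": "application/json",
        },
    )
```

Sending it would be a matter of passing the request to `urllib.request.urlopen`. The same style of helper can set the REST `per_page` query parameter per call instead of relying on one global page size.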

Refreshing credentials / handling bad credentials

We use GitHub app installation-based credentials, which require us to generate a short-lived access token. We generate and cache the access tokens for 10 minutes, which means we also need to refresh them pretty frequently. So we had to build a wrapper around every API request to ensure that the token is refreshed before making the API call. This can be especially complicated when using PyGithub.
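The caching wrapper can be sketched along these lines. `InstallationTokenCache` and `fetch_token` are hypothetical names. `fetch_token` stands in for whatever call actually mints a new installation token, and the 10-minute lifetime mirrors the cache window described above.

```python
import time
from typing import Callable, Optional


class InstallationTokenCache:
    """Caches an access token and refreshes it once the TTL has elapsed."""

    def __init__(
        self,
        fetch_token: Callable[[], str],
        ttl_seconds: float = 600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch_token = fetch_token
        self._ttl = ttl_seconds
        self._clock = clock
        self._token: Optional[str] = None
        self._fetched_at = 0.0

    def get(self) -> str:
        """Return a cached token, fetching a new one if it is missing or stale."""
        now = self._clock()
        if self._token is None or now - self._fetched_at >= self._ttl:
            self._token = self._fetch_token()
            self._fetched_at = now
        return self._token

    def invalidate(self) -> None:
        """Drop the cached token, e.g. after a bad-credentials response."""
        self._token = None
```

Every API call asks the cache for a token through `get()`, so refresh logic lives in one place rather than being scattered across call sites.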

GraphQL and Rest APIs

Although we started out exclusively using the REST APIs from GitHub, over time we have also started investing in the GraphQL APIs. In some cases, GraphQL provides data that may not be available from the REST APIs, and sometimes making batch calls using the GraphQL APIs can help conserve our rate limits. I do not expect that we will completely get rid of either type of API, since using a mix of the two provides a good balance. The main challenge with using both is interoperability – we have to convert data objects from one format to another.
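As an example of batching with GraphQL, the sketch below builds a single query that fetches several pull requests at once using field aliases. The helper name and the selected fields are illustrative choices, not a description of the queries we actually run.

```python
from typing import Iterable


def build_pr_batch_query(numbers: Iterable[int]) -> str:
    """Build one GraphQL query that fetches many PRs via aliases."""
    fields = "number state mergeable"
    aliases = "\n".join(
        # Each alias (pr_12, pr_15, ...) lets the same field appear many times.
        f"    pr_{int(n)}: pullRequest(number: {int(n)}) {{ {fields} }}"
        for n in numbers
    )
    return (
        "query($owner: String!, $name: String!) {\n"
        "  repository(owner: $owner, name: $name) {\n"
        f"{aliases}\n"
        "  }\n"
        "}"
    )
```

The resulting string would be sent as the `query` field of a POST to the GraphQL endpoint, with `owner` and `name` supplied as variables, replacing one REST request per PR.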

Testing the integration

Testing an app that heavily uses third-party APIs can also be very complicated. For some of the core functionality we write some unit tests, but a vast majority of our logic is tested using end-to-end tests.

To write effective e2e tests, we have also built a testing library using GraphQL APIs that helps us rapidly create and tear down objects in GitHub. If you are interested in using this test library, give me a holler. We are happy to share it with anyone who has taken on the gigantic task of building a complex GitHub integration.

Looking ahead

These were some of our learnings from the last two years of building Aviator. I’m sure we will learn a lot more as we build the app to scale to millions of developers, but for now, we have a few more ideas to implement in the near future:

- Do more things natively – as we have learned that GitHub can be unpredictable at times, we will start relying less on the APIs and perform more actions natively using git CLI.

- Scaling APIs with caching – To further reduce the number of API calls we make to GitHub, we will also continue getting better at caching data that we receive from GitHub. This might mean storing temporary API results in memory or caching certain object properties in the database.

- Better rate limit management – We’ve consistently improved our rate limit usage as we scale to larger and larger teams. Another concept we are now exploring is to ration various API usages internally and assign priority. This way, when we get closer to the rate limits, we can cut off the low-priority actions without impacting the high-priority usage.

- Better error handling – One key to providing a better experience to developers is by providing better debug information. It’s unlikely that we can process every possible error reported by GitHub, but we can build a robust error management service to pass through an actionable debug message back to the user.
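The rate limit rationing idea from the list above could look something like the following sketch. Since this is still something we are exploring, treat it as one possible shape rather than a design: the `RateBudget` class, the two priority levels, and the 20% reserve are all hypothetical.

```python
from enum import IntEnum


class Priority(IntEnum):
    LOW = 0
    HIGH = 1


class RateBudget:
    """Tracks remaining API calls and holds back a reserve for HIGH priority."""

    def __init__(self, limit: int, reserve_fraction: float = 0.2) -> None:
        self.remaining = limit
        # Calls at or below this level are available to HIGH priority only.
        self._reserve = int(limit * reserve_fraction)

    def try_spend(self, priority: Priority) -> bool:
        """Consume one call if allowed for this priority, else return False."""
        if self.remaining <= 0:
            return False
        if priority is Priority.LOW and self.remaining <= self._reserve:
            return False
        self.remaining -= 1
        return True
```

Low-priority work such as background refreshes gets cut off first as the budget runs down, while high-priority actions like merging can keep going until the limit is truly exhausted.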

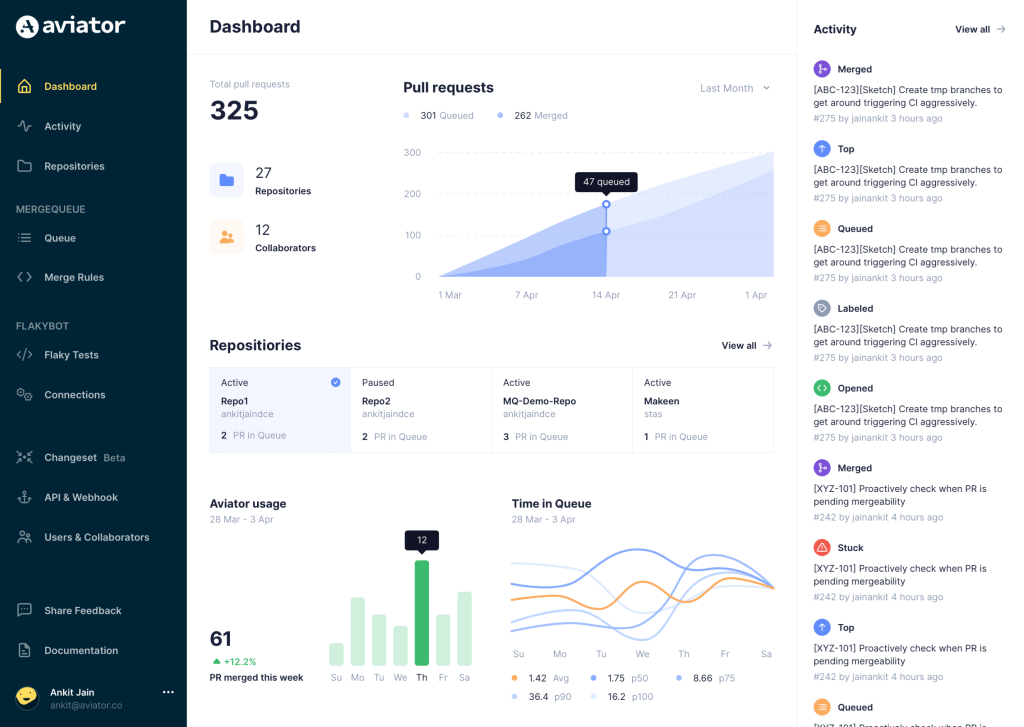

Aviator: Automate your cumbersome merge processes

Aviator automates tedious developer workflows by managing git Pull Requests (PRs) and continuous integration test (CI) runs to help your team avoid broken builds, streamline cumbersome merge processes, manage cross-PR dependencies, and handle flaky tests while maintaining their security compliance.

There are 4 key components to Aviator:

- MergeQueue – an automated queue that manages the merging workflow for your GitHub repository to help protect important branches from broken builds. The Aviator bot uses GitHub Labels to identify Pull Requests (PRs) that are ready to be merged, validates CI checks, processes semantic conflicts, and merges the PRs automatically.

- ChangeSets – workflows to synchronize validating and merging multiple PRs within the same repository or multiple repositories. Useful when your team often sees groups of related PRs that need to be merged together, or otherwise treated as a single broader unit of change.

- FlakyBot – a tool to automatically detect, take action on, and process results from flaky tests in your CI infrastructure.

- Stacked PRs CLI – a command line tool that helps developers manage cross-PR dependencies. This tool also automates syncing and merging of stacked PRs. Useful when your team wants to promote a culture of smaller, incremental PRs instead of large changes, or when your workflows involve keeping multiple, dependent PRs in sync.