LLM Agents for Code Migration: A Real-World Case Study

LLM agents are changing how developers handle code migration, turning tedious, error-prone refactors into intelligent, semi-automated workflows. In this case study, we show how agents migrated a Java codebase to TypeScript by analyzing code, planning steps, and executing changes with architectural awareness and CI-backed validation.

TL;DR

- LLM agents are autonomous systems that don’t just generate text: they plan, reason, and act to handle multi-step tasks like code migration and refactoring, combining tools, memory, and feedback loops.

- In code migration, they break down complex rewrites (e.g., Java → TypeScript) into smaller steps, understand dependencies, and apply consistent transformations, reducing manual effort and regressions.

- The workflow typically involves multiple specialized agents (reader, planner, migrator), uses vector databases for context memory, and runs CI/CD checks (e.g., GitHub Actions, ESLint, Prettier) to validate output.

- Fallback and recovery mechanisms (e.g., using secondary LLMs, manual intervention triggers, regression testing) ensure resilience when migrations hit errors or unexpected patterns.

- The result: faster, more scalable migrations with fewer errors, letting developers focus on high-level review instead of repetitive edits while keeping migrations testable and consistent across large codebases.

What Are LLM Agents?

LLM agents are autonomous systems that use large language models to perform goal-driven tasks. They don’t just generate text; they plan actions, reason through steps, and make decisions across complex workflows. Developers can use them to orchestrate multi-step tasks like code refactoring, documentation updates, or test generation.

Unlike basic LLM prompts, agents combine memory, tools, and feedback loops. They interact with a programming environment, evaluate results, and decide what to do next. Some agents retrieve documentation. Others search a codebase or apply edits based on structured goals.

In code migration projects, agents can break down a large change into smaller, manageable operations. They analyze code, suggest replacements, and execute transformations with contextual awareness.
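The reason, act, and observe cycle described above can be sketched as a small loop. This is a minimal illustration, not LangChain's API: the Policy function stands in for the LLM call, and the tool names (searchCode, editFile) and the in-memory file map are hypothetical.

```typescript
// Hypothetical tool names; a real agent framework defines its own.
type ToolName = "searchCode" | "editFile" | "finish";

interface Step {
  tool: ToolName;
  input: string;
}

interface Observation {
  step: Step;
  output: string;
}

// Every tool except "finish" maps an input string to an output string.
type Tools = Record<Exclude<ToolName, "finish">, (input: string) => string>;

// Stand-in for the LLM: chooses the next step from the goal and the history so far.
type Policy = (goal: string, history: Observation[]) => Step;

function runAgent(goal: string, policy: Policy, tools: Tools, maxSteps = 10): Observation[] {
  const history: Observation[] = []; // short-term memory of what has happened
  for (let i = 0; i < maxSteps; i++) {
    const step = policy(goal, history); // reason: decide what to do next
    if (step.tool === "finish") break;
    const output = tools[step.tool](step.input); // act: call the chosen tool
    history.push({ step, output }); // observe: record the result for the next decision
  }
  return history;
}

// Usage with an in-memory "codebase" and a scripted policy.
const files: Record<string, string> = { "UserService.java": "public class UserService {}" };
const tools: Tools = {
  searchCode: (query) => Object.keys(files).filter((f) => files[f].includes(query)).join(","),
  editFile: (file) => {
    files[file] = "// migrated\n" + files[file];
    return `edited ${file}`;
  },
};
const policy: Policy = (_goal, history) => {
  if (history.length === 0) return { tool: "searchCode", input: "UserService" };
  if (history.length === 1) return { tool: "editFile", input: history[0].output };
  return { tool: "finish", input: "" };
};

const trace = runAgent("migrate UserService", policy, tools);
```

The maxSteps cap is a simple guard against a policy that never chooses "finish"; in practice the policy would be a model call whose output is parsed into a Step.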

The Challenge of Code Migration

Migrating code isn’t just about syntax updates. Most migrations involve deeper changes to architecture, logic, and dependencies.

Teams often move from one language to another, like Java to TypeScript, or modernize code from older versions, such as Python 2 to Python 3. Each change introduces risks. Legacy projects often contain tightly coupled modules, undocumented behaviors, or outdated third-party libraries. These create blockers that slow migration work.

Manual refactoring takes a toll on developers. Engineers must switch contexts constantly, interpret unfamiliar logic, and avoid breaking changes. Regressions slip in. Tests break. Progress stalls.

LLM agents offer a new way forward. They don’t replace developers; they assist them. Agents can scan a codebase, propose updates, and carry out repetitive edits, helping teams reduce the workload, catch edge cases, and accelerate migrations with fewer regressions.

Why Use LLM Agents for Code Migration and Code Refactoring?

LLM agents bring significant value to code migration and refactoring workflows by blending automation with contextual reasoning. Traditional migration often involves tedious manual edits, repetitive patterns, and a high risk of introducing regressions. Agents reduce this burden by acting as decision-making assistants that understand, plan, and act across your entire codebase.

Here’s what makes LLM agents effective:

- Multi-file Understanding

Agents don’t treat files as isolated units. They read across modules, understand how components interact, and keep the big picture in view while making changes.

- Consistent Refactoring Patterns

Once an agent identifies a transformation (say, changing a callback to a promise), it applies that pattern uniformly across the project, avoiding the inconsistencies that manual edits often introduce.

- Built-in Memory and Reasoning

When backed by a persistent memory store, agents remember what they’ve changed. If they rename a function or migrate a pattern, they carry that decision forward, which prevents duplicated effort and keeps architectural decisions consistent.

- Scalable Automation

Whether it’s a 10-file refactor or a 1,000-file migration, agents that persist their context can be paused, resumed, or re-run without losing track of what’s been done or why.

- Less Cognitive Overhead for Devs

Developers can focus on code review and higher-level decisions, while agents handle repetitive edits, regression fixes, and search-and-replace work with contextual awareness.
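The "decide once, apply everywhere" behavior described in this list can be sketched as a shared registry of rename decisions that is replayed on every later file. This is a hypothetical illustration rather than a feature of any specific framework; the RenameRegistry class and the getAllUsers to listUsers rename are invented for the example.

```typescript
// Hypothetical shared memory of rename decisions made during the migration.
class RenameRegistry {
  private readonly rules = new Map<string, string>();

  // Record a decision once; a conflicting second decision is rejected, not silently applied.
  record(from: string, to: string): void {
    const existing = this.rules.get(from);
    if (existing !== undefined && existing !== to) {
      throw new Error(`Conflicting rename for ${from}: ${existing} vs ${to}`);
    }
    this.rules.set(from, to);
  }

  // Replay every recorded rule on a file. Word boundaries keep a rule for
  // getAllUsers from touching a longer identifier such as getAllUsersById.
  apply(source: string): string {
    let result = source;
    this.rules.forEach((to, from) => {
      const escaped = from.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
      // A replacer function avoids "$" in the new name being read as a pattern.
      result = result.replace(new RegExp(`\\b${escaped}\\b`, "g"), () => to);
    });
    return result;
  }
}

const registry = new RenameRegistry();
registry.record("getAllUsers", "listUsers"); // hypothetical decision made while migrating UserService

const controllerFile = registry.apply("const users = userService.getAllUsers();");
const testFile = registry.apply("expect(getAllUsers()).toHaveLength(2); getAllUsersById(1);");
```

Rejecting conflicting decisions, instead of letting the latest one win, is what keeps two files from drifting apart when different steps of the agent propose different names.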

Tools & Frameworks Used

This case study used a modular stack to build, run, and validate the LLM agent’s migration workflow. Each component had a clear role, ranging from orchestration and memory handling to CI validation and static analysis.

Reasoning and Orchestration

- LangChain

Acts as the control layer for agent workflows. It lets you define how the agent reasons, remembers, and executes tasks step by step, and it supports tool integration (e.g., code search, file editing) and agent memory.

- GPT-4 / Claude

These language models drive the core reasoning. They interpret source code, infer migration rules, and generate modified code with architectural context. GPT-4 was used for most multi-step reasoning; Claude served as a fallback or reviewer model in some cases.

Memory and Retrieval

- Vector Database (e.g., Chroma)

Helps the agent remember previously seen files, patterns, and decisions. Code chunks are embedded into vectors, which the agent uses to retrieve relevant context before acting. This avoids re-processing and enhances consistency.
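To show what "retrieve relevant context before acting" means mechanically, here is a toy in-memory stand-in for an embedding model plus vector store. It does not use Chroma's API and its "embeddings" are just token counts; a real setup would call an embedding model and let the database handle similarity search. The CodeMemory class and the file contents are hypothetical.

```typescript
type SparseVector = Map<string, number>;

// Toy "embedding": split identifiers (including camelCase) into lowercase tokens and count them.
function embed(text: string): SparseVector {
  const vec: SparseVector = new Map();
  const tokens = text
    .replace(/([a-z0-9])([A-Z])/g, "$1 $2") // findById -> find By Id
    .toLowerCase()
    .split(/[^a-z0-9]+/)
    .filter((t) => t.length > 0);
  for (const t of tokens) vec.set(t, (vec.get(t) ?? 0) + 1);
  return vec;
}

function norm(v: SparseVector): number {
  let sum = 0;
  v.forEach((x) => {
    sum += x * x;
  });
  return Math.sqrt(sum);
}

function cosine(a: SparseVector, b: SparseVector): number {
  let dot = 0;
  a.forEach((x, token) => {
    dot += x * (b.get(token) ?? 0);
  });
  const denom = norm(a) * norm(b);
  return denom === 0 ? 0 : dot / denom;
}

interface Chunk {
  id: string;
  text: string;
  vector: SparseVector;
}

// In-memory store: add() indexes a chunk once; query() returns the k most similar chunks.
class CodeMemory {
  private readonly chunks: Chunk[] = [];

  add(id: string, text: string): void {
    this.chunks.push({ id, text, vector: embed(text) });
  }

  query(text: string, k = 1): Chunk[] {
    const q = embed(text);
    return this.chunks
      .map((chunk) => ({ chunk, score: cosine(q, chunk.vector) }))
      .sort((x, y) => y.score - x.score)
      .slice(0, k)
      .map((s) => s.chunk);
  }
}

const memory = new CodeMemory();
memory.add("UserRepository.java", "public interface UserRepository extends JpaRepository<User, Long> {}");
memory.add("OrderController.java", '@RestController public class OrderController { @GetMapping("/orders") }');

// Before migrating UserService, fetch the chunk most related to the call it makes.
const hits = memory.query("userRepository.findById(id)");
```

Indexing each chunk once in add() is what lets later queries skip re-processing files, which is the consistency benefit the paragraph describes.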

Runtime and Validation

- Docker

Ensures migrations run in an isolated and reproducible environment. Every agent execution spins up a container that matches the project’s runtime stack (e.g., Node.js, Python).

- GitHub Actions

Automatically validates changes made by the agent. Unit tests, integration tests, and linters run after each commit to catch regressions early and maintain trust in the automation.

Code Quality Enforcement

- ESLint + Prettier

Run post-migration to enforce style and detect syntax issues. ESLint flags potential errors or deprecated usage, while Prettier ensures code formatting aligns with team standards.

Real-World Case Study Setup: Java to TypeScript Migration

We designed a multi-agent system using LangChain to automate the migration from Java to TypeScript. The setup involved three specialized agents, each performing distinct roles in the workflow.

1. File Reader Agent

This agent scanned the Java project and parsed files into structured code representations. It indexed class definitions, annotations, methods, and dependencies using embeddings stored in a Vector DB (e.g., Chroma).
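A deliberately simplified sketch of the indexing step: a regex-based extractor that turns a simple Spring-style class into the metadata shape shown below. This is an assumption-laden toy (no nested braces, no multi-argument generics); a real File Reader would use a proper Java parser, and the function names here are hypothetical.

```typescript
interface ParamMeta {
  name: string;
  type: string;
}

interface MethodMeta {
  name: string;
  returns: string;
  params: ParamMeta[];
  calls: string[];
}

interface ClassMeta {
  class: string;
  annotations: string[];
  dependencies: string[];
  methods: MethodMeta[];
}

// Collect every match of a global (/g) regex; a non-global regex would loop forever.
function allMatches(re: RegExp, text: string): RegExpExecArray[] {
  if (!re.global) throw new Error("allMatches requires a /g regex");
  const out: RegExpExecArray[] = [];
  let m: RegExpExecArray | null;
  while ((m = re.exec(text)) !== null) out.push(m);
  return out;
}

function extractClassMeta(source: string): ClassMeta {
  // Annotations directly above "public class Name".
  const classMatch = /((?:@\w+\s+)*)public\s+class\s+(\w+)/.exec(source);
  if (classMatch === null) throw new Error("No public class found");
  const annotations: string[] = classMatch[1].match(/@\w+/g) ?? [];

  // Treat "private final Type name;" fields as injected dependencies.
  const dependencies = allMatches(/private\s+final\s+(\w+)\s+\w+\s*;/g, source).map((m) => m[1]);

  // Public methods with a return type; constructors have none, so they are skipped.
  // Method bodies are assumed to contain no nested braces.
  const methods = allMatches(/public\s+([\w<>]+)\s+(\w+)\s*\(([^)]*)\)\s*\{([^}]*)\}/g, source).map(
    (m): MethodMeta => ({
      name: m[2],
      returns: m[1],
      params: m[3]
        .split(",")
        .map((p) => p.trim().split(/\s+/).filter((tok) => tok.length > 0 && !tok.startsWith("@")))
        .filter((toks) => toks.length >= 2)
        .map((toks) => ({ name: toks[toks.length - 1], type: toks.slice(0, -1).join(" ") })),
      calls: allMatches(/(\w+)\.(\w+)\s*\(/g, m[4]).map((c) => `${c[1]}.${c[2]}`),
    }),
  );

  return { class: classMatch[2], annotations, dependencies, methods };
}

const javaSource = `
@Service
public class UserService {
    private final UserRepository userRepository;

    public UserService(UserRepository userRepository) {
        this.userRepository = userRepository;
    }

    public User getUser(Long id) {
        return userRepository.findById(id).orElse(null);
    }
}`;

const meta = extractClassMeta(javaSource);
```

The resulting object is what would then be embedded and stored alongside the raw code chunk.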

Input – Java Service Class:

```java
import org.springframework.stereotype.Service;

@Service
public class UserService {

    private final UserRepository userRepository;

    public UserService(UserRepository userRepository) {
        this.userRepository = userRepository;
    }

    public User getUser(Long id) {
        // findById returns Optional<User>; orElse(null) unwraps it or yields null
        return userRepository.findById(id).orElse(null);
    }
}
```

Output – Structured Metadata:

```json
{
  "class": "UserService",
  "annotations": ["@Service"],
  "dependencies": ["UserRepository"],
  "methods": [
    {
      "name": "getUser",
      "returns": "User",
      "params": [{"name": "id", "type": "Long"}],
      "calls": ["userRepository.findById"]
    }
  ]
}
```

2. Planner Agent

The Planner Agent used this indexed metadata to build a migration roadmap. It broke down the system into logical modules (DAO, services, controllers) and generated a dependency graph.

Planner Output:

```json
{
  "taskQueue": [
    "migrate UserRepository.java",
    "migrate UserService.java",
    "migrate UserController.java"
  ],
  "dependencies": {
    "UserController": ["UserService"],
    "UserService": ["UserRepository"]
  }
}
```

This setup ensured we migrated leaf modules first and resolved dependencies layer by layer.
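The leaf-first ordering can be derived mechanically from the dependency map with a topological sort. The sketch below uses Kahn's algorithm; it is one reasonable way to produce the task queue above, not necessarily how the Planner Agent computed it, and migrationOrder is a hypothetical name.

```typescript
// Returns modules in an order where every module comes after all of its dependencies.
function migrationOrder(dependencies: Record<string, string[]>): string[] {
  const remaining = new Map<string, number>(); // number of unmigrated dependencies per module
  const dependents = new Map<string, string[]>(); // reverse edges: module -> modules that use it

  const register = (m: string): void => {
    if (!remaining.has(m)) {
      remaining.set(m, 0);
      dependents.set(m, []);
    }
  };

  for (const mod of Object.keys(dependencies)) {
    register(mod);
    for (const dep of dependencies[mod]) {
      register(dep); // leaves such as UserRepository may appear only as dependencies
      remaining.set(mod, remaining.get(mod)! + 1);
      dependents.get(dep)!.push(mod);
    }
  }

  // Start from leaves (no dependencies); sorting keeps the output deterministic.
  const ready = Array.from(remaining.keys())
    .filter((m) => remaining.get(m) === 0)
    .sort();
  const order: string[] = [];
  while (ready.length > 0) {
    const next = ready.shift()!;
    order.push(next);
    for (const user of dependents.get(next)!) {
      const left = remaining.get(user)! - 1;
      remaining.set(user, left);
      if (left === 0) ready.push(user);
    }
  }

  // Modules left over are part of a cycle and cannot be ordered automatically.
  if (order.length !== remaining.size) {
    throw new Error("Dependency cycle detected; these modules need manual planning");
  }
  return order;
}

const taskQueue = migrationOrder({
  UserController: ["UserService"],
  UserService: ["UserRepository"],
}).map((m) => `migrate ${m}.java`);
```

Failing loudly on a cycle, rather than picking an arbitrary order, matches the idea that tightly coupled modules are exactly where a human should step in.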

3. Migrator Agent

The Migrator Agent performed the actual translation of Java code into TypeScript using code synthesis, retrieval-augmented generation (RAG), and context-aware prompts.
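A context-aware prompt of this kind can be assembled from the pieces the earlier agents produced. The sketch below is a hypothetical prompt builder: the MigrationContext fields, the template wording, and the example rules are illustrative assumptions, not the prompts used in this case study.

```typescript
interface MigrationContext {
  javaSource: string;
  retrievedSnippets: string[]; // e.g., top-k chunks returned by the vector store
  migratedSignatures: Record<string, string>; // dependency name -> TypeScript signature already produced
  rules: string[]; // project-wide conventions the planner settled on
}

function buildMigrationPrompt(ctx: MigrationContext): string {
  const sections: string[] = ["You are migrating a Java codebase to TypeScript, one file at a time."];

  if (ctx.rules.length > 0) {
    sections.push(["Follow these conventions:", ...ctx.rules.map((r) => `- ${r}`)].join("\n"));
  }

  // Signatures of already-migrated dependencies keep names and types consistent across files.
  const deps = Object.keys(ctx.migratedSignatures);
  if (deps.length > 0) {
    const lines = deps.map((d) => ctx.migratedSignatures[d]);
    sections.push(["Already-migrated dependencies (reuse these exact signatures):", ...lines].join("\n"));
  }

  // Retrieved context (RAG) is only included when the vector store returned something.
  if (ctx.retrievedSnippets.length > 0) {
    sections.push(["Related code from the repository:", ...ctx.retrievedSnippets].join("\n"));
  }

  sections.push(`Java source to migrate:\n${ctx.javaSource}`);
  sections.push("Return only the TypeScript file.");
  return sections.join("\n\n");
}

const migrationPrompt = buildMigrationPrompt({
  javaSource: "public class UserService { /* ... */ }",
  retrievedSnippets: [],
  migratedSignatures: {
    UserRepository: "UserRepository.findById(id: number): Promise<User | null>",
  },
  rules: ["Map Long to number", "Map Optional<T>.orElse(null) to T | null"],
});
```

Because UserRepository is migrated first, its TypeScript signature is already available when UserService is processed, which is one reason the leaf-first ordering matters.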

Generated TypeScript Output:

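```typescript
// Imports assume a NestJS project; the model and repository paths are illustrative.
import { Injectable } from '@nestjs/common';
import { User } from './user.model';
import { UserRepository } from './user.repository';

@Injectable()
export class UserService {
  constructor(private readonly userRepository: UserRepository) {}

  async getUser(id: number): Promise<User | null> {
    const user = await this.userRepository.findById(id);
    return user ?? null;
  }
}
```

This migration included: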

- Making getUser async and changing its return type to Promise<User | null>.

- Using await to handle the asynchronous call to findById.

- Keeping constructor-based dependency injection, expressed as a private readonly parameter property.

- Using the nullish coalescing operator (??) to replicate Optional.orElse(null).
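The type decisions in this list (Long to number, Optional<T> to T | null, async methods returning a Promise) can be captured as a small mapping table that a prompt or a post-processing check enforces. This sketch covers only the cases in this example and is an assumption, not the Migrator Agent's actual rule set; mapJavaType and asyncReturnType are hypothetical names.

```typescript
// Java scalar types and their assumed TypeScript equivalents.
// Note: long/Long values above Number.MAX_SAFE_INTEGER lose precision as number;
// such IDs would need string or bigint instead.
const SCALARS = new Map<string, string>([
  ["byte", "number"], ["short", "number"], ["int", "number"], ["long", "number"],
  ["float", "number"], ["double", "number"],
  ["Byte", "number"], ["Short", "number"], ["Integer", "number"], ["Long", "number"],
  ["Float", "number"], ["Double", "number"],
  ["boolean", "boolean"], ["Boolean", "boolean"],
  ["String", "string"], ["void", "void"],
]);

function mapJavaType(javaType: string): string {
  const t = javaType.trim();
  const scalar = SCALARS.get(t);
  if (scalar !== undefined) return scalar;

  const generic = /^(\w+)<(.+)>$/.exec(t);
  if (generic !== null) {
    const outer = generic[1];
    const inner = mapJavaType(generic[2]);
    // Optional<T> is read as "T or null", matching the orElse(null) translation.
    if (outer === "Optional") return `${inner} | null`;
    // List<T> becomes T[]; unions need parentheses, e.g. (T | null)[].
    if (outer === "List" || outer === "Collection") {
      return inner.includes("|") ? `(${inner})[]` : `${inner}[]`;
    }
    return t; // other generics (e.g., Map<K, V>) are left for the model or a reviewer
  }
  return t; // domain classes such as User keep their names
}

// Methods that become async wrap their mapped return type in a Promise.
function asyncReturnType(javaType: string): string {
  return `Promise<${mapJavaType(javaType)}>`;
}

const findByIdReturn = asyncReturnType("Optional<User>"); // the type of findById after migration
```

Leaving unrecognized generics untouched, instead of guessing, keeps the table conservative: anything it cannot map stays visible for the model or a reviewer to handle.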

4. CI/CD Integration for Output Validation

Every generated file was validated through automated pipelines using GitHub Actions, ESLint, and Prettier.

CI Step Example:

```yaml
- name: Lint & Format Check
  run: |
    # Globs are quoted so ESLint and Prettier expand them, including nested folders
    npx eslint "migrated/**/*.ts"
    npx prettier --check "migrated/**/*.ts"
```

This enforced:

- Lint-clean, consistently formatted output

- Early detection of bad transformations before they could break the build

- Faster developer reviews and greater trust in the migrated code

5. Jest Unit Test for UserService

import { UserService } from './user.service';

import { UserRepository } from './user.repository';

import { User } from './user.model';

describe('UserService', () => {

let userService: UserService;

let userRepository: UserRepository;

beforeEach(() => {

userRepository = new UserRepository();

userService = new UserService(userRepository);

});

it('should return a user when found', async () => {

const mockUser = new User(1, 'John Doe');

jest.spyOn(userRepository, 'findById').mockResolvedValue(mockUser);

const result = await userService.getUser(1);

expect(result).toEqual(mockUser);

});

it('should return null when user not found', async () => {

jest.spyOn(userRepository, 'findById').mockResolvedValue(null);

const result = await userService.getUser(999);

expect(result).toBeNull();

});

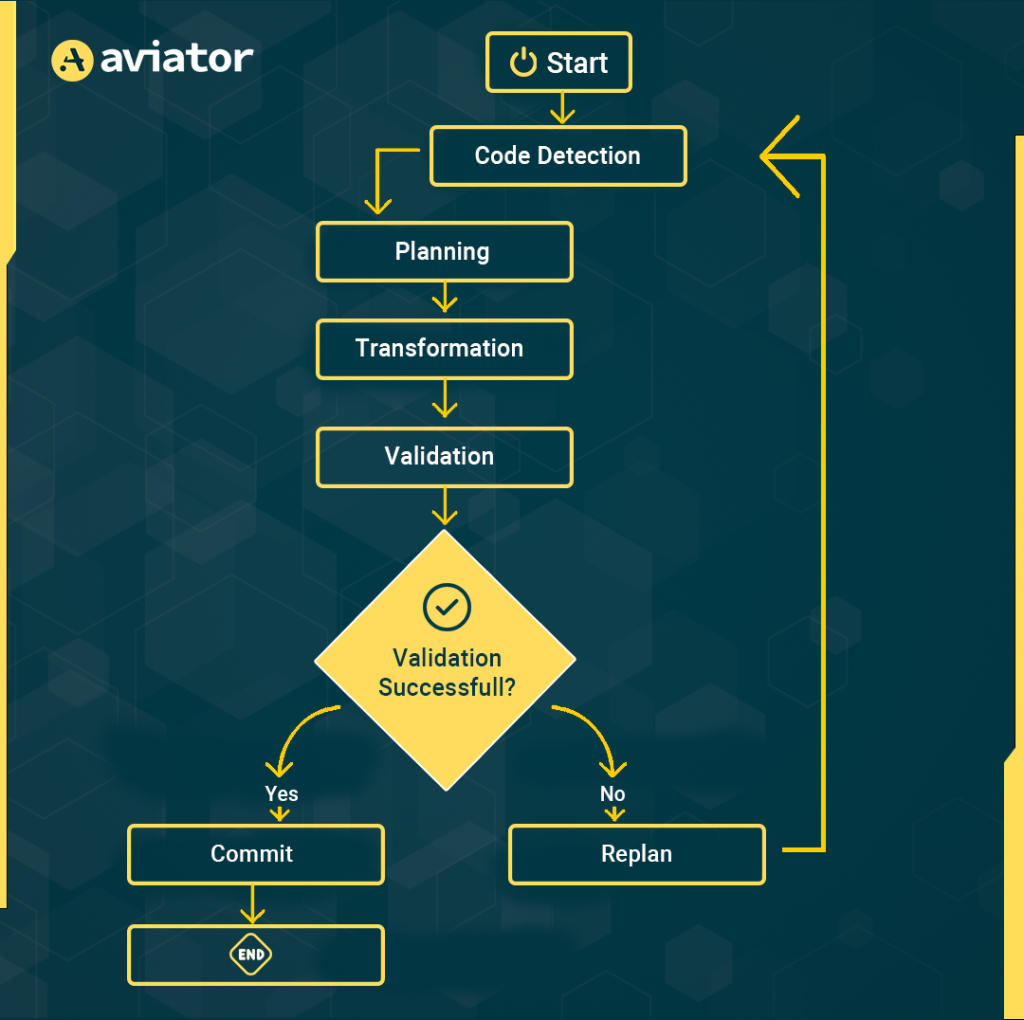

});Here’s a flow diagram explaining the migration process in brief:

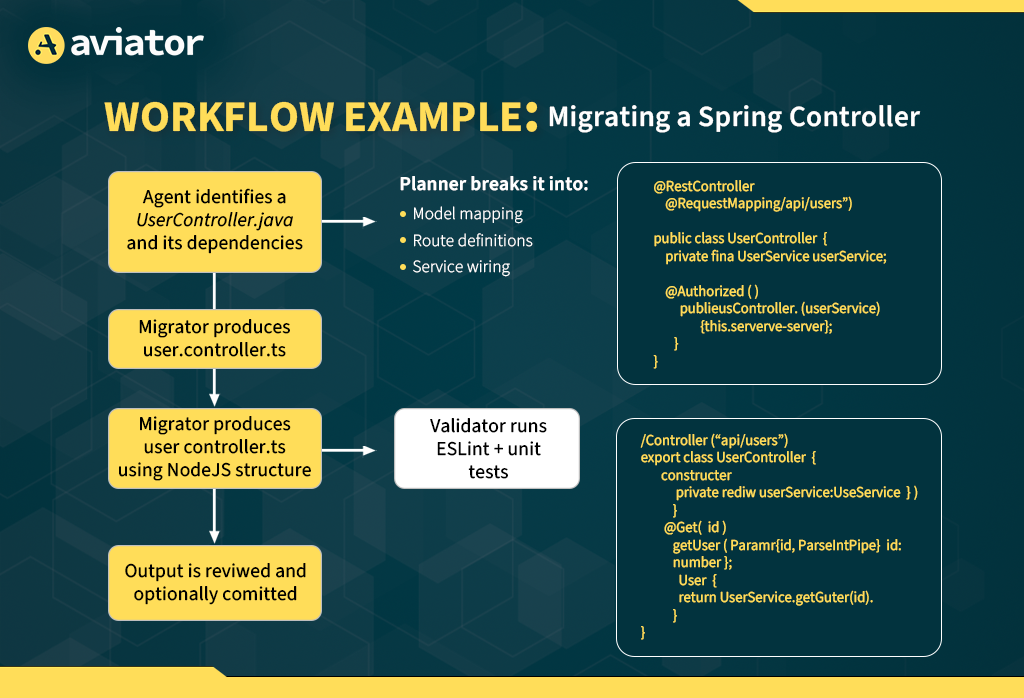

Workflow Example: Migrating a Spring Controller to TypeScript Locally

We had just finished working on a Spring Boot application and were diving into a new Node.js-based service. Our task? Migrate a Spring controller to a TypeScript Express controller. The challenge wasn’t just converting Java to TypeScript; it was doing it efficiently, with minimal effort, and entirely offline.

So we turned to LLM agents, which we had recently been experimenting with, for the migration. Our goal was clear: migrate the Spring controller and get the routes running in TypeScript with Express.

The Starting Point

Step 1: Install Required Tools

1. Install Ollama

Ollama lets you run open-weight models, including code-focused ones, locally.

- Download the Windows installer: https://ollama.com/download

- Once installed, open PowerShell or Command Prompt and pull a code-focused model:

```shell
ollama pull codellama:latest
```

You can also try deepseek-coder or mistral for stronger reasoning.

Step 2: Create Migration Workspace

```shell
mkdir JavaToTypeScript
cd JavaToTypeScript
```

Inside, create folders:

```
java-src/        <-- Your Java source code
ts-output/       <-- TypeScript output from the LLM
prompt-scripts/  <-- Scripts to assist the LLM
```

Step 3: Start a Local LLM Agent

In your terminal:

```shell
ollama run codellama
```

You’ll enter a REPL-like interface where you can paste your prompts.
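Instead of pasting into the REPL, a script in the prompt-scripts folder could send the same prompt to Ollama's local HTTP API (POST /api/generate on the default port 11434, with stream set to false to get one JSON object whose response field holds the generated text). This is a hypothetical helper; the fetch function is injected so the request can be built and inspected without a running server.

```typescript
interface GenerateRequest {
  model: string;
  prompt: string;
  stream: boolean;
}

interface HttpResponseLike {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}

// Minimal shape of fetch that this helper needs; Node 18+'s built-in fetch satisfies it.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<HttpResponseLike>;

const OLLAMA_URL = "http://localhost:11434/api/generate";

function buildMigrationRequest(model: string, javaSource: string): GenerateRequest {
  const prompt =
    "Migrate the following Spring Boot Java controller to idiomatic TypeScript using Express.js. " +
    "Include the routes, request handling, and basic response formatting.\n\n" +
    javaSource;
  // stream: false asks Ollama for a single JSON object instead of a stream of chunks.
  return { model, prompt, stream: false };
}

async function migrateWithOllama(fetchFn: FetchLike, model: string, javaSource: string): Promise<string> {
  const res = await fetchFn(OLLAMA_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildMigrationRequest(model, javaSource)),
  });
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  const data = (await res.json()) as { response?: string };
  if (typeof data.response !== "string") throw new Error("Unexpected Ollama response shape");
  return data.response; // the generated TypeScript
}

const ollamaRequest = buildMigrationRequest("codellama", "public class UserController {}");
```

With Node.js 18 or later, calling migrateWithOllama(fetch, "codellama", source) while Ollama is running would return the model's answer as a string, which the script could then write into ts-output.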

Manual Agent Prompt Workflow (Human-in-the-Loop)

Now you’ll guide the agent using structured prompts.

Example Prompt 1 – Setup for Migration

Prompt:

Migrate the following Spring Boot Java controller to idiomatic TypeScript using Express.js. Include the routes, request handling, and basic response formatting.

We had a simple Spring controller, UserController.java, that looked like this:

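```java
// Before:
import java.util.List;

import org.springframework.web.bind.annotation.*;

@RestController
public class UserController {

    private final UserService userService;

    public UserController(UserService userService) {
        this.userService = userService;
    }

    @GetMapping("/users")
    public List<User> getUsers() {
        return userService.getAllUsers();
    }

    @PostMapping("/users")
    public User createUser(@RequestBody User user) {
        return userService.createUser(user);
    }
}
```

I wanted to replicate this functionality in TypeScript using Express.js, but I didn’t want to spend hours rewriting everything.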

The Solution: Using an LLM Agent Locally

After we fed the code into the prompt, the LLM returned this within seconds:

import express from 'express';

import bodyParser from 'body-parser';

const app = express();

app.use(bodyParser.json());

app.get('/users', (req, res) => {

const users = userService.getAllUsers();

res.json(users);

});

app.post('/users', (req, res) => {

const user = req.body;

const createdUser = userService.createUser(user);

res.json(createdUser);

});

app.listen(3000, () => {

console.log('Server started on port 3000');

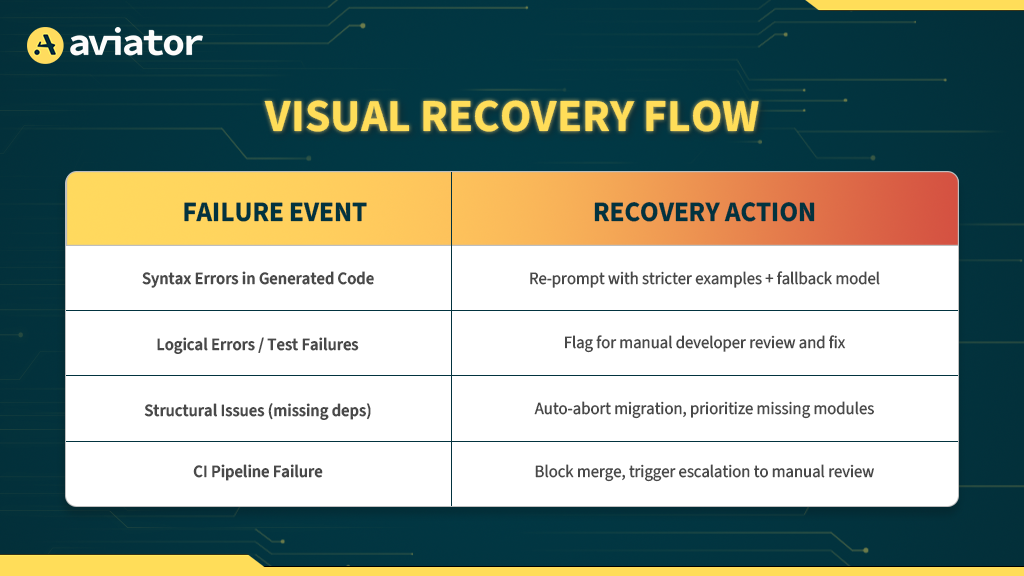

});Error Handling and Recovery Mechanisms

Even with careful planning and CI integration, agent-driven code migrations can encounter errors, misinterpretations, or structural issues. To make the system resilient, we implemented multi-layered fallback and recovery strategies.

Fallback Models

- Secondary LLMs:

If the primary LLM (e.g., GPT-4) failed to generate a valid migration for a module, the system automatically retried using an alternative model (e.g., Claude 3 or a fine-tuned variant).

- Prompt Adaptation:

On first failure, the agent adjusted its prompt by injecting additional examples, stricter syntax rules, or scaffolding templates before retrying generation.
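The two fallback strategies above can be combined into one retry chain. In this sketch, which is an assumption about ordering rather than the exact policy used, the primary model is tried as-is, then with an adapted prompt, then each secondary model in turn; models are stubbed as plain functions and validate() stands in for the compiler and lint checks.

```typescript
interface Model {
  name: string;
  generate: (prompt: string) => string; // stand-in for an LLM call
}

type Validator = (code: string) => boolean;

interface Attempt {
  model: string;
  prompt: string;
  valid: boolean;
}

// Hypothetical prompt adaptation: append stricter instructions before retrying.
function adaptPrompt(prompt: string): string {
  return `${prompt}\n\nReturn only valid TypeScript. Include all imports and type annotations.`;
}

function migrateWithFallback(
  prompt: string,
  primary: Model,
  secondaries: Model[],
  validate: Validator,
): { code: string | null; attempts: Attempt[] } {
  // Order: primary as-is, primary with an adapted prompt, then each secondary model.
  const plan: Array<[Model, string]> = [[primary, prompt], [primary, adaptPrompt(prompt)]];
  for (const m of secondaries) plan.push([m, adaptPrompt(prompt)]);

  const attempts: Attempt[] = [];
  for (const [model, p] of plan) {
    const code = model.generate(p);
    const valid = validate(code);
    attempts.push({ model: model.name, prompt: p, valid });
    if (valid) return { code, attempts };
  }
  return { code: null, attempts }; // nothing passed: hand off to manual review
}

// Usage with stubbed models and a toy validator (balanced braces only).
const primary: Model = { name: "primary", generate: () => "export class UserService {" };
const secondary: Model = { name: "secondary", generate: () => "export class UserService {}" };
const bracesBalanced: Validator = (code) =>
  (code.match(/\{/g) ?? []).length === (code.match(/\}/g) ?? []).length;

const result = migrateWithFallback("Migrate UserService.java", primary, [secondary], bracesBalanced);
```

Keeping the full attempts log, rather than only the final code, gives reviewers the context they need when a module ends up in manual review.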

Manual Intervention Triggers

- Confidence Thresholds:

Agents flagged migrations whose token confidence scores, code diff coverage, or structural completeness fell below a set threshold. These modules were marked for manual review.

- Interactive Review Mode:

Developers could step in at flagged points, review the agent’s suggestions, and manually edit or approve them before committing the code.

- Abort & Retry Commands:

If the planner detected cascading failures in dependent modules, it could abort the batch migration, fix upstream issues, and rerun the sequence.
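These triggers can be sketched as a single triage step over a batch of migration results. Assumptions here: each result carries one combined confidence score and a pass/fail flag, the 0.8 threshold is an arbitrary example, and HealthController and AuditLogger are hypothetical modules. Any module that depends, directly or transitively, on a failed module is aborted instead of being accepted or reviewed.

```typescript
interface ModuleResult {
  module: string;
  confidence: number; // hypothetical combined score from the signals above
  passedChecks: boolean; // compiled, linted, and tested cleanly
}

const REVIEW_THRESHOLD = 0.8; // assumed value; tune per project

function triage(results: ModuleResult[], dependencies: Record<string, string[]>) {
  const failed = new Set(results.filter((r) => !r.passedChecks).map((r) => r.module));

  // Abort every module that depends, directly or transitively, on a failed module.
  const aborted = new Set<string>();
  let changed = true;
  while (changed) {
    changed = false;
    for (const mod of Object.keys(dependencies)) {
      if (failed.has(mod) || aborted.has(mod)) continue;
      if (dependencies[mod].some((d) => failed.has(d) || aborted.has(d))) {
        aborted.add(mod);
        changed = true;
      }
    }
  }

  // Remaining modules are either accepted or routed to a human, based on confidence.
  const usable = results.filter((r) => r.passedChecks && !aborted.has(r.module));
  return {
    accepted: usable.filter((r) => r.confidence >= REVIEW_THRESHOLD).map((r) => r.module),
    review: usable.filter((r) => r.confidence < REVIEW_THRESHOLD).map((r) => r.module),
    failed: Array.from(failed),
    aborted: Array.from(aborted),
  };
}

const outcome = triage(
  [
    { module: "UserRepository", confidence: 0.95, passedChecks: false },
    { module: "UserService", confidence: 0.9, passedChecks: true },
    { module: "UserController", confidence: 0.85, passedChecks: true },
    { module: "HealthController", confidence: 0.5, passedChecks: true },
    { module: "AuditLogger", confidence: 0.92, passedChecks: true },
  ],
  { UserController: ["UserService"], UserService: ["UserRepository"] },
);
```

Here UserService and UserController passed their own checks but are still aborted, because they were built on top of a UserRepository migration that failed.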

Re-validation and Safety Nets

- Full Regression Testing:

After every major module migration, full regression suites (unit + integration tests) ran to detect runtime failures immediately.

- Shadow Deployments:

New TypeScript services were deployed alongside the existing Java services during early stages. This allowed safe testing against mirrored production traffic without risking downtime.

- Version Control Safety:

Every migration step created an isolated feature branch, and branches were merged only after passing validation, keeping the mainline stable.
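The core of a shadow comparison is sending the same mirrored request to both services and recording any difference. In this sketch the two handlers are hypothetical in-process stand-ins for HTTP calls to the Java and TypeScript services, and response bodies are compared with object keys sorted so that field-order differences between the stacks are not reported as regressions.

```typescript
interface MirroredRequest {
  method: string;
  path: string;
  body?: unknown;
}

interface ShadowResponse {
  status: number;
  body: unknown;
}

// In-process stand-in for an HTTP call to one of the two services.
type Handler = (req: MirroredRequest) => ShadowResponse;

interface Mismatch {
  request: MirroredRequest;
  legacy: ShadowResponse;
  migrated: ShadowResponse;
}

// Serialize with sorted object keys so field order differences between stacks are ignored.
function canonical(value: unknown): string {
  if (Array.isArray(value)) return `[${value.map((v) => canonical(v)).join(",")}]`;
  if (value !== null && typeof value === "object") {
    const obj = value as Record<string, unknown>;
    const fields = Object.keys(obj)
      .sort()
      .map((k) => `${JSON.stringify(k)}:${canonical(obj[k])}`);
    return `{${fields.join(",")}}`;
  }
  return String(JSON.stringify(value)); // String() also covers undefined
}

function compareShadow(requests: MirroredRequest[], legacy: Handler, migrated: Handler): Mismatch[] {
  const mismatches: Mismatch[] = [];
  for (const request of requests) {
    const a = legacy(request);
    const b = migrated(request);
    if (a.status !== b.status || canonical(a.body) !== canonical(b.body)) {
      mismatches.push({ request, legacy: a, migrated: b });
    }
  }
  return mismatches;
}

// Usage: same users payload with different key order, and a diverging 404 vs 500.
const javaService: Handler = (req) =>
  req.path === "/users" ? { status: 200, body: [{ id: 1, name: "John Doe" }] } : { status: 404, body: null };
const tsService: Handler = (req) =>
  req.path === "/users" ? { status: 200, body: [{ name: "John Doe", id: 1 }] } : { status: 500, body: null };

const diffs = compareShadow(
  [{ method: "GET", path: "/users" }, { method: "GET", path: "/missing" }],
  javaService,
  tsService,
);
```

Only the migrated service's divergence on the error path is reported; in a real shadow setup, the legacy response is the one returned to users while mismatches are logged for review.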

The Result

In just a few seconds, I had a working TypeScript Express controller, nearly identical in function to the original Spring controller. All that was left was some minor tweaking (like handling middleware for req.body) and running tsc --noEmit to type-check everything.

It felt like I had just unlocked a productivity cheat code. With a little cleanup, my Spring controller was now running smoothly in TypeScript.

Conclusion

LLM agents show real promise in tackling the gritty work of code migration, especially in large, legacy monoliths. Pairing them with established migration practices (incremental rollout, automated validation, and human review) can significantly improve the process. While they’re not a silver bullet, they offer developers a serious upgrade over manual refactors.

By breaking migration down into repeatable tasks (reading code, planning transformations, and validating output), these agents act more like reliable copilots than generic assistants.

With the right guardrails in place (prompt engineering, validation layers, RAG), you can use LLM agents to:

- Accelerate module-wise rewrites

- Maintain consistency across migrated layers

- Cut down cognitive load for devs handling cross-language rewrites

The Java-to-TypeScript case study shows that pairing LLM agents with existing CI tooling can make gradual migrations scalable, testable, and collaborative.

FAQs

1. Can LLMs Fully Automate Code Migration?

No, at least not today. LLMs can handle a large part of the migration, especially for boilerplate or stateless code. But nuanced business logic, security edge cases, and system-specific constraints still need human review.

2. What if the Agent Misinterprets the Logic?

That happens, especially with deeply nested abstractions or unconventional patterns. Our approach included fallback review loops, where developers manually validate and patch agent output before merging.

3. Is This Production-ready?

It depends. The workflow is production-friendly when paired with strong validation (like TypeScript compilers, unit tests, and linters). But we recommend running agents in a CI-assisted dev environment, not directly on prod branches.

4. Do You Need to Train a Custom Model?

No. We used off-the-shelf LLMs (like GPT-4) paired with LangChain agents and RAG setups. For domain-heavy use cases (e.g., niche frameworks), fine-tuning or adding examples from your own codebase to the prompts may help, but it’s not mandatory.