Beyond Prompts: The Evolution of Developer-Agent Collaboration

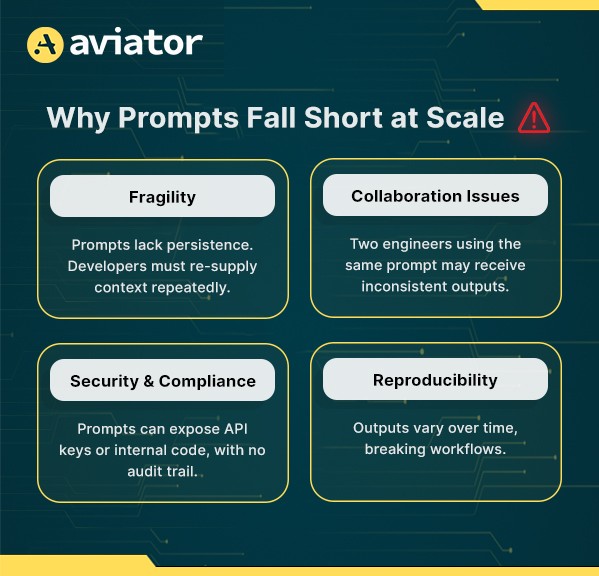

Prompts helped developers start collaborating with AI agents, but at scale they lead to fatigue, lost context, and inconsistent results. Spec-driven development with Runbooks replaces ad-hoc prompts with structured, repeatable workflows. This makes collaboration reproducible, auditable, and scalable for real-world engineering.

TL;DR

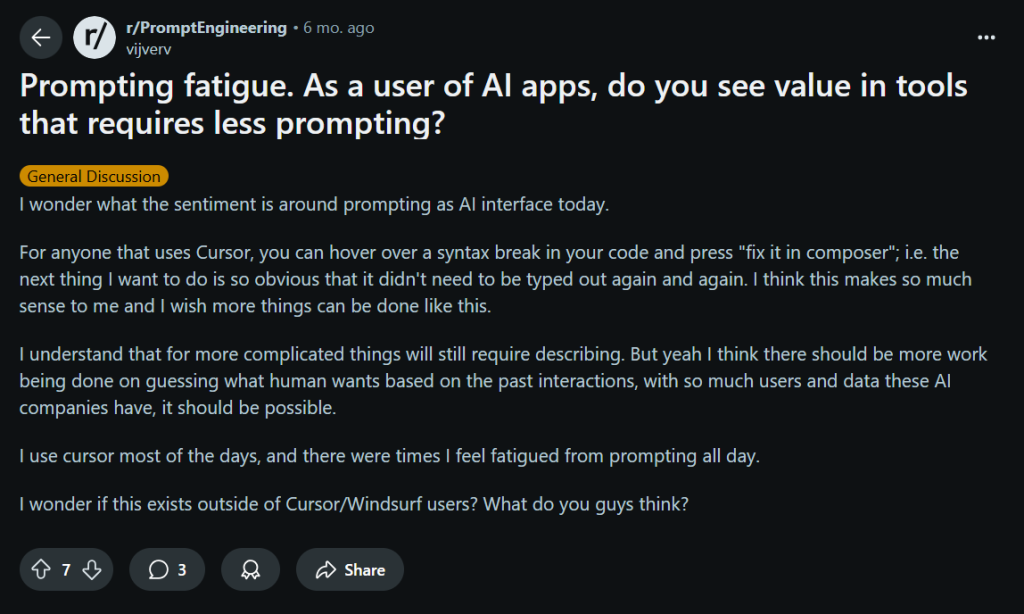

- Prompts were useful for jumpstarting developer-agent collaboration, but they quickly break down at scale. They offer no persistence or reproducibility and little in the way of governance. Engineers often find themselves re-supplying the same context or endlessly rephrasing instructions just to coax the model into producing the expected result.

- Developers report experiencing “prompt fatigue,” inconsistent outputs among team members, and a loss of context across sessions. This makes collaboration brittle and unsuitable for enterprise workflows.

- Collaborative spec-driven development solves these issues by replacing ad-hoc natural language prompts with structured workflows. Runbooks are living documents that capture context from repositories and code reviews, combine it with a team’s AI-prompting knowledge and execution patterns, and improve with every use.

- Collaborative, spec-driven development with Runbooks gives teams a shared space to collaborate on prompts, align on execution workflows, and maintain a clear audit trail of decisions.

- Spec-driven development turns one-off agentic experiments into repeatable automation that teams can reuse and adapt.

Prompt fatigue is real! On Reddit communities like r/programming and r/ai_dev, threads frequently highlight frustrations with “prompt fatigue,” the tedious cycle of rephrasing inputs until an AI produces the desired output.

Other threads note that context is easily lost across sessions. Engineers end up manually pasting code, configuration files, or test cases just to keep the agent on track. The result is a brittle workflow that breaks under the pressure of real-world software complexity.

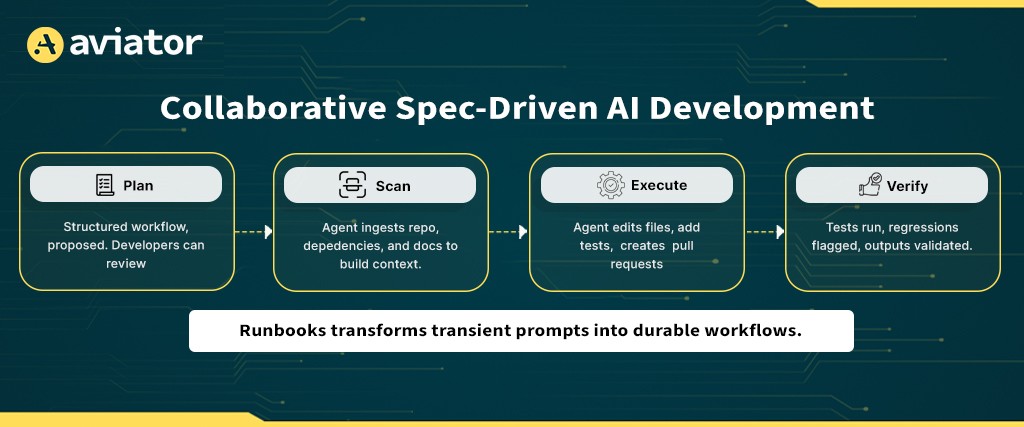

This is where Collaborative Spec-Driven AI Development comes in. Instead of prescribing how an agent should behave through carefully worded prompts, developers define what outcome needs to be achieved in the form of structured workflows. These workflows serve as specifications that agents can execute: scanning a repository, planning changes, making modifications, and verifying the results. Each workflow is a persistent, versioned artifact that is auditable, reproducible, and adaptable across projects.
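To make "specifications that agents can execute" concrete, here is a minimal TypeScript sketch of a spec expressed as data rather than prose. The `Step` type and `runSpec` function are hypothetical illustrations, not the Runbooks API. Each stage is a named step, and the runner executes the steps in order. It stops at the first failure, so later stages never run on a broken state.

```typescript
// Hypothetical model of a spec-driven workflow. The spec is plain data,
// so it can be versioned, diffed, and reviewed like any other file.
type Stage = "scan" | "plan" | "execute" | "verify";

interface Step {
  stage: Stage;
  description: string;
  // Returns true on success; a real agent would edit files, run tools, etc.
  run: () => boolean;
}

interface RunResult {
  completed: Stage[];
  failedAt?: Stage;
}

// Execute steps in their declared order and stop at the first failure.
function runSpec(steps: Step[]): RunResult {
  const completed: Stage[] = [];
  for (const step of steps) {
    if (!step.run()) {
      return { completed, failedAt: step.stage };
    }
    completed.push(step.stage);
  }
  return { completed };
}

// Usage: a four-stage spec whose verify step fails.
const spec: Step[] = [
  { stage: "scan", description: "Index ./services", run: () => true },
  { stage: "plan", description: "Draft migration steps", run: () => true },
  { stage: "execute", description: "Apply edits", run: () => true },
  { stage: "verify", description: "Run the test suite", run: () => false },
];

const result = runSpec(spec);
console.log(result.completed, result.failedAt); // scan, plan, execute completed; failed at verify
```

Stopping at the first failure is a design choice in this sketch. A real tool might instead pause and hand control back to a developer.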

Runbooks are one concrete implementation of this idea. They are a concept we’ve been developing to turn AI-assisted coding from a single-player activity into a multiplayer one.

Runbooks give teams a shared space to collaborate on prompts, align on execution workflows, and maintain a clear audit trail of decisions. Think of Runbooks as the missing link between product specifications and code. A runbook isn’t just documentation; it’s a living knowledge base.

Background: Prompt-Based Agent Interaction

Prompts remain the most common interface for AI agents. They are natural language instructions, often refined through techniques such as zero-shot, few-shot, or chain-of-thought prompting. Their accessibility made them appealing early on: any developer could write a prompt and immediately accelerate routine tasks without new tooling.

Yet prompts are inherently brittle. They lack version control, persistence, and auditability. Developers often describe having to paste the same snippets into every session, only for the agent to drift after a few exchanges. Collaboration amplifies the problem: two engineers can provide near-identical prompts and receive outputs that differ in structure, naming conventions, or test coverage. Integrating these inconsistent results into a shared repository creates fragmentation and technical debt.

Security concerns further complicate prompt-driven workflows. Because prompts often include sensitive information, such as API keys, schemas, or internal code, they risk exposing data without leaving a traceable record. Unlike artifacts stored in source control, prompts provide no audit trail, making policy enforcement and peer review nearly impossible in regulated environments.

These weaknesses illustrate why prompting alone cannot sustain enterprise-scale adoption. It served as a bootstrap for early exploration, but as collaboration and compliance demands grow, developer–agent interaction requires a more standardized approach.

Collaborative Spec-Driven AI Development

Collaborative spec-driven AI development transforms fragile prompts into structured workflows. Instead of endlessly re-explaining context, developers define what outcome needs to be achieved, and agents execute it step by step. The workflow follows four core stages:

Core Workflow

1. PLAN

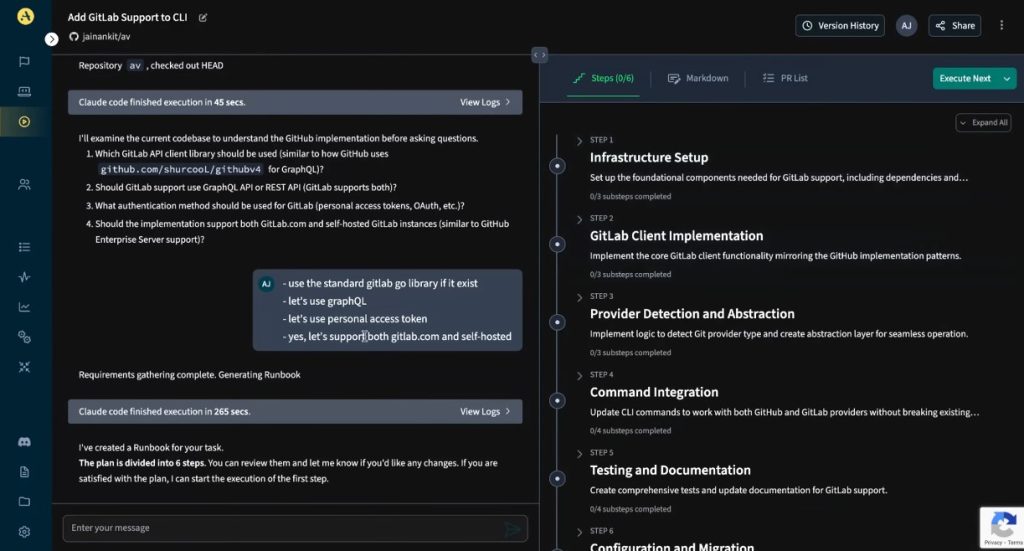

The workflow begins with planning. Developers or teams define the desired outcome, such as migrating a logging library or adding a new API endpoint. The agent translates this into a structured plan of steps. Developers can review and adjust the plan before execution, creating a feedback loop that ensures reliability and alignment with team standards.
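Because the plan is data, reviewing it can be as simple as editing a list before anything runs. The following is a minimal sketch, assuming a hypothetical `PlanStep` shape and an `applyReview` helper. The reviewer drops the steps they reject and inserts the steps the agent missed. Only the approved plan goes forward to execution.

```typescript
// Hypothetical plan shape; in practice the agent would generate these steps.
interface PlanStep {
  id: string;
  action: string;
}

interface Review {
  reject: string[]; // ids of proposed steps to drop
  insertAfter: { afterId: string; step: PlanStep }[]; // steps the reviewer adds
}

// Apply a reviewer's edits to the agent's proposed plan.
// Insertions anchored to a rejected step are dropped in this sketch.
function applyReview(plan: PlanStep[], review: Review): PlanStep[] {
  const rejected = new Set(review.reject);
  const out: PlanStep[] = [];
  for (const step of plan) {
    if (rejected.has(step.id)) continue;
    out.push(step);
    // Place reviewer-added steps directly after their anchor step.
    for (const ins of review.insertAfter) {
      if (ins.afterId === step.id) out.push(ins.step);
    }
  }
  return out;
}

const proposed: PlanStep[] = [
  { id: "1", action: "Add /status route" },
  { id: "2", action: "Rewrite router module" },
  { id: "3", action: "Add controller tests" },
];

const approved = applyReview(proposed, {
  reject: ["2"],
  insertAfter: [{ afterId: "1", step: { id: "1a", action: "Follow existing route naming" } }],
});
console.log(approved.map((s) => s.id)); // [ '1', '1a', '3' ]
```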

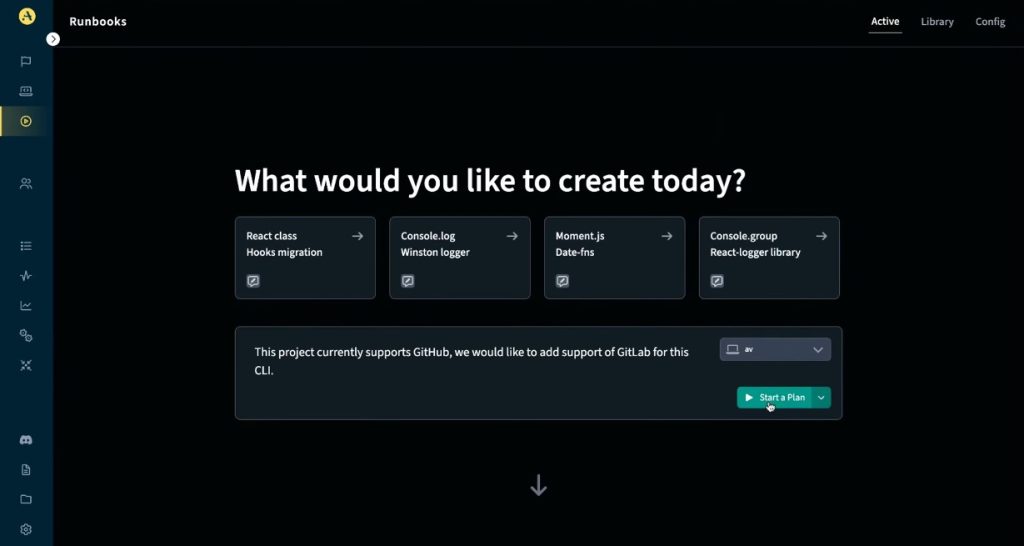

The Actions tab lists available Runbooks, either out-of-the-box templates or those created by teammates, providing a shared library of workflows.

2. SCAN

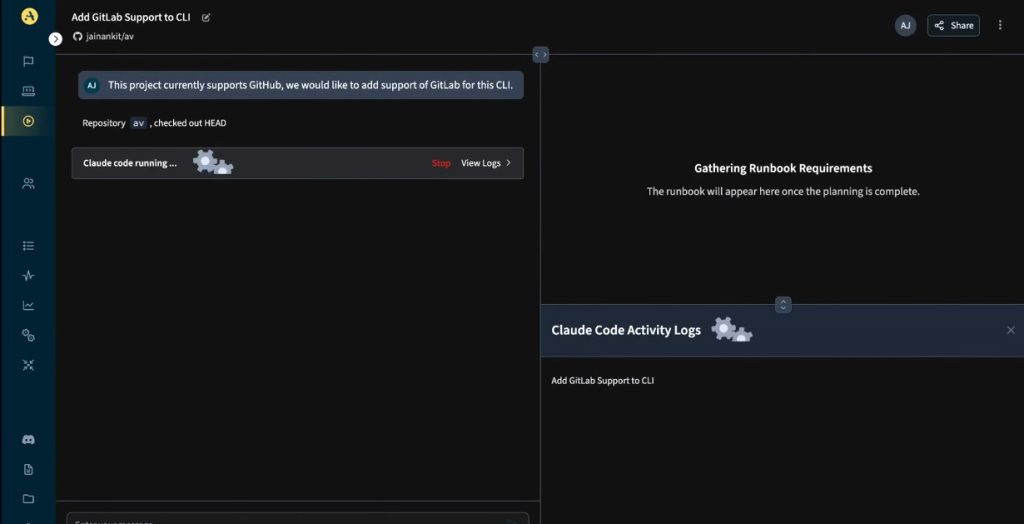

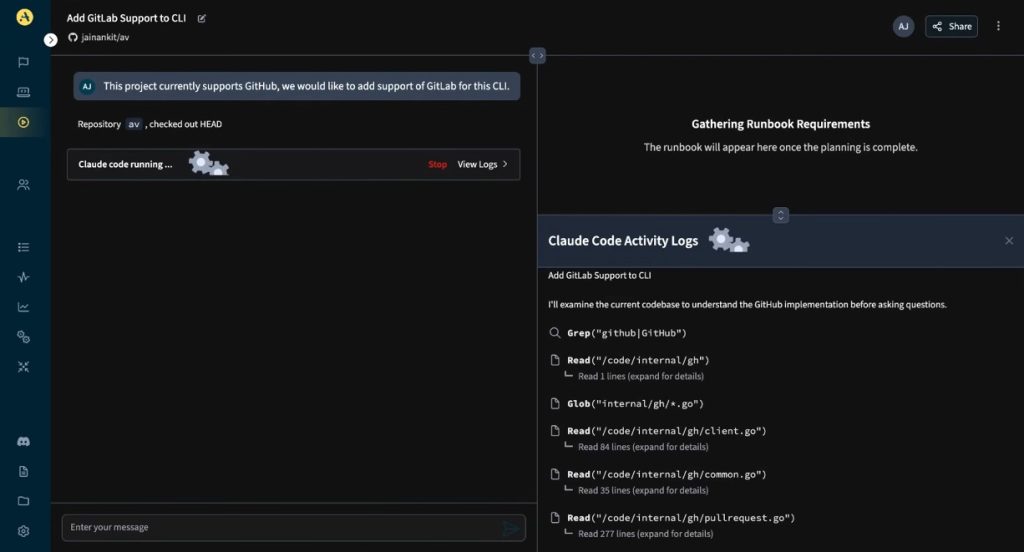

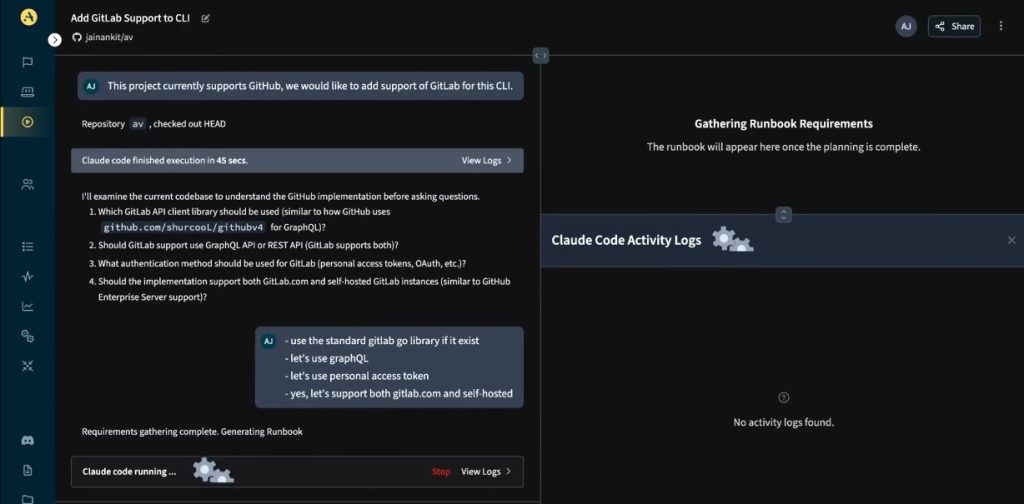

In the scan stage, the agent ingests project code, documentation, and dependency metadata to build a contextual understanding of the repository. Unlike prompts, which require manual injection of snippets, Runbooks automate this discovery process. Agents can even pause to ask clarifying questions, ensuring they have the right information before making changes.
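The discovery step can be sketched without any agent at all. The sketch assumes the repository is available as a hypothetical in-memory map of paths to contents; a real scanner would walk the file system. The function does three things:

- It selects source files by extension.
- It reads declared dependencies from `package.json`.
- It records which files still call `console.log`, similar in spirit to the opportunities a dashboard might surface.

```typescript
// Hypothetical in-memory view of a repository: path -> file contents.
type Repo = Record<string, string>;

interface ScanReport {
  sourceFiles: string[];
  dependencies: string[];
  consoleLogSites: string[]; // files that still call console.log
}

function scanRepo(repo: Repo, extensions: string[]): ScanReport {
  const paths = Object.keys(repo).sort();
  const sourceFiles = paths.filter((p) => extensions.some((ext) => p.endsWith(ext)));

  // Dependency metadata from package.json, if the repo has one.
  let dependencies: string[] = [];
  const manifest = repo["package.json"];
  if (manifest !== undefined) {
    const pkg = JSON.parse(manifest) as { dependencies?: Record<string, string> };
    dependencies = Object.keys(pkg.dependencies ?? {}).sort();
  }

  // Migration candidates: source files that still use console.log.
  const consoleLogSites = sourceFiles.filter((p) => repo[p].includes("console.log("));
  return { sourceFiles, dependencies, consoleLogSites };
}

const repo: Repo = {
  "package.json": JSON.stringify({ dependencies: { winston: "^3.0.0", express: "^4.18.0" } }),
  "services/api.ts": 'console.log("request received");',
  "services/db.js": "export const pool = createPool();",
  "README.md": "# Services",
};

console.log(scanRepo(repo, [".js", ".ts"]));
```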

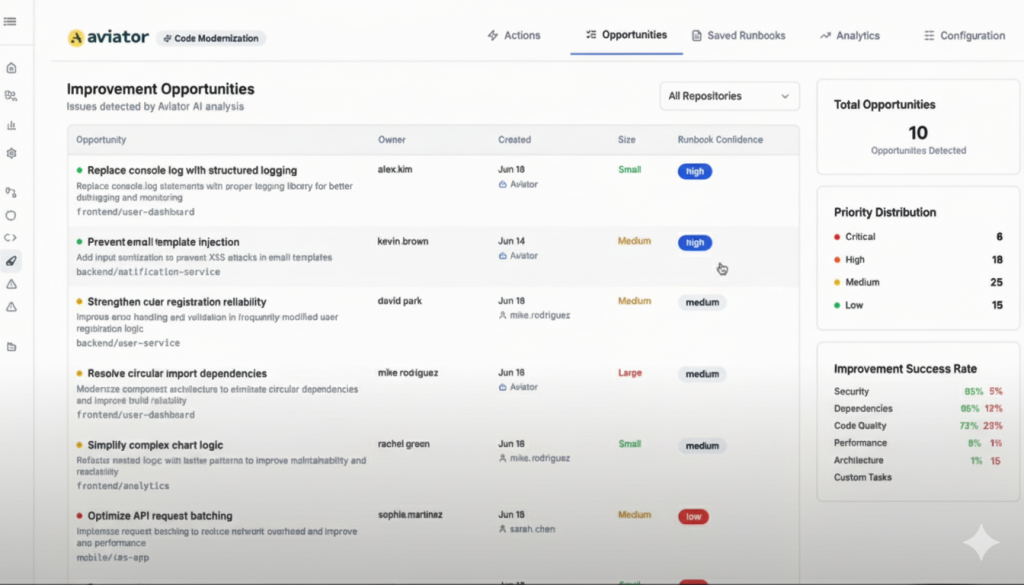

Runbooks scan repositories and configurations. Here, the dashboard shows the opportunities to enhance the codebase that agents identified automatically. The agent then asks clarifying questions to confirm the requirements.

3. EXECUTE

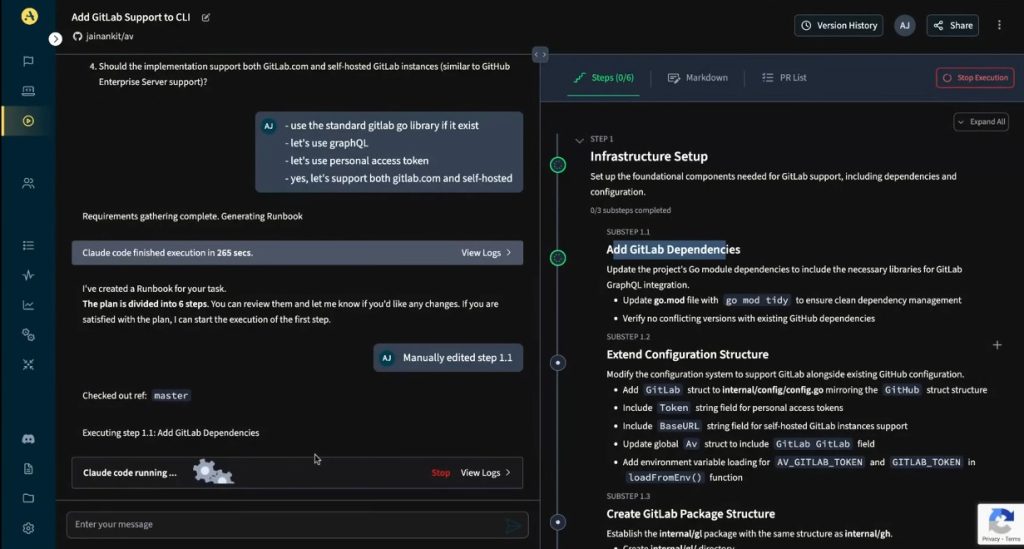

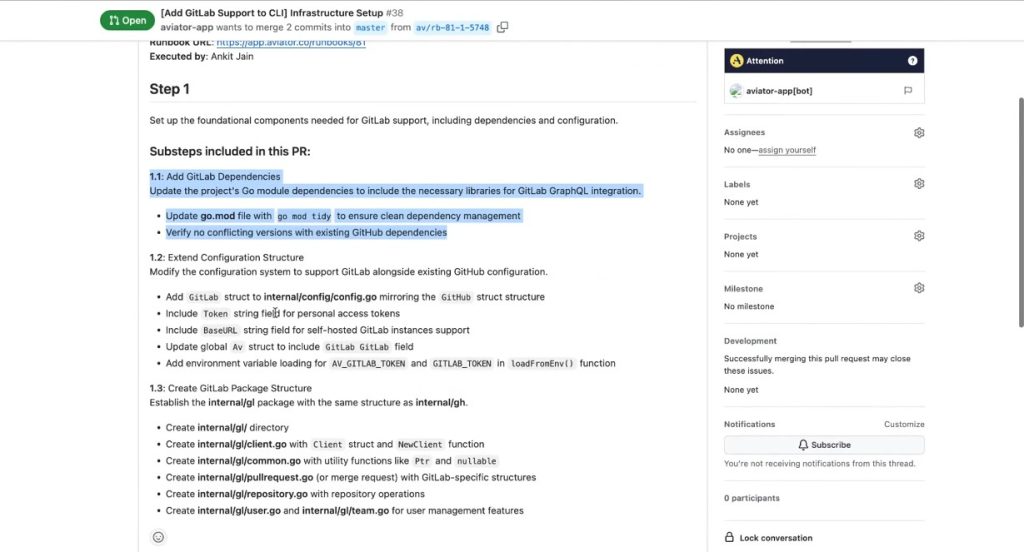

The execution stage is where the agent carries out changes: editing files, adding tests, or generating pull requests. Execution can run step by step, with a human approving each modification, or in full automation mode when confidence is higher.
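The two execution modes differ only in whether a human gate sits between steps. Here is a minimal sketch, assuming a hypothetical `approve` callback that stands in for the review UI. In `step-by-step` mode, each change waits for approval, and the run halts at the first rejection. In `auto` mode, the gate is skipped.

```typescript
type Mode = "step-by-step" | "auto";

interface Change {
  file: string;
  summary: string;
}

// Hypothetical approval hook; a real tool would show the change in a UI.
type Approver = (change: Change) => boolean;

// Apply changes in order, asking for approval only in step-by-step mode.
// Returns the files that were (notionally) modified.
function executeChanges(changes: Change[], mode: Mode, approve: Approver): string[] {
  const applied: string[] = [];
  for (const change of changes) {
    if (mode === "step-by-step" && !approve(change)) {
      break; // a rejected step halts the run so the human can edit its context
    }
    applied.push(change.file); // placeholder for the actual file edit
  }
  return applied;
}

const changes: Change[] = [
  { file: "src/routes.ts", summary: "register /status" },
  { file: "src/status.ts", summary: "add controller" },
  { file: "test/status.test.ts", summary: "add tests" },
];

// This reviewer rejects anything touching the test directory.
const reviewer: Approver = (c) => !c.file.startsWith("test/");
console.log(executeChanges(changes, "step-by-step", reviewer)); // [ 'src/routes.ts', 'src/status.ts' ]
console.log(executeChanges(changes, "auto", reviewer).length); // 3
```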

Another practical detail is that Runbooks can automatically create pull requests directly from the configuration page. Instead of switching contexts or triggering workflows through a separate interface, developers can configure and launch a spec-driven development workflow that generates a PR in one step, reducing overhead and aligning with existing review practices.

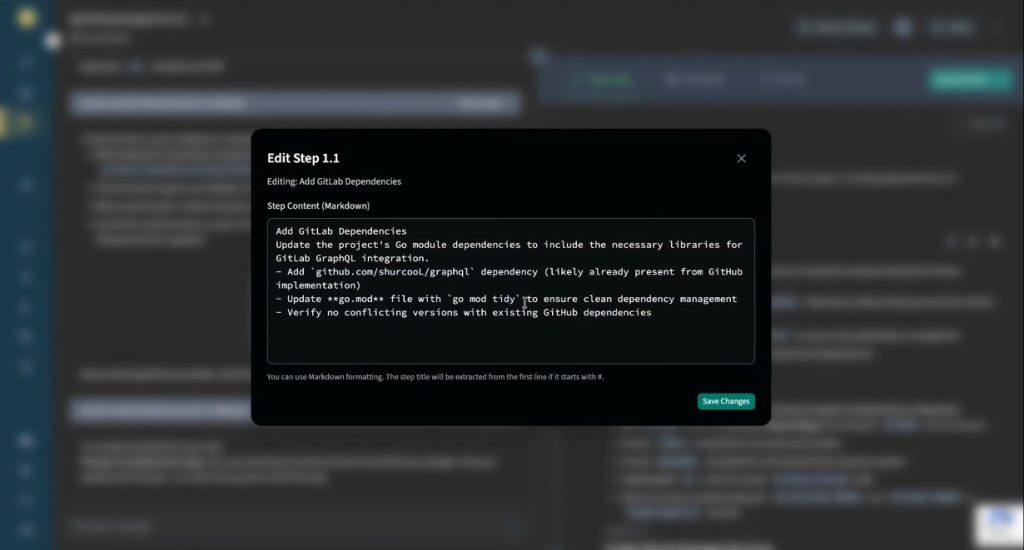

You can analyze each step and then give permission to execute the next one, or you can open a particular step and edit the context if needed.

4. VERIFY

Finally, verification checks that the outputs are correct. Agents run test suites, validate consistency, and flag any regressions before changes are merged. This step closes the loop, turning what would have been a one-off prompt into a repeatable, verified workflow.
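One concrete way to flag regressions is to compare test outcomes before and after the agent's changes. The sketch below assumes results are available as a hypothetical map from test name to pass/fail. A regression is any test that passed on the base branch and then fails or disappears afterwards. Tests that were already failing are not counted.

```typescript
// Hypothetical test-result shape: test name -> passed?
type Results = Record<string, boolean>;

// A regression is a test that passed before the change and now fails or is missing.
function findRegressions(before: Results, after: Results): string[] {
  return Object.keys(before)
    .filter((name) => before[name] && after[name] !== true)
    .sort();
}

const base: Results = { "logs request id": true, "formats errors": true, "legacy flag": false };
const head: Results = { "logs request id": true, "legacy flag": false };

const regressions = findRegressions(base, head);
// Block the merge if anything regressed.
console.log(regressions.length === 0 ? "ok to merge" : `regressions: ${regressions.join(", ")}`);
```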

Developers can dry-run a Runbook step by step to validate correctness before allowing agents to run autonomously.

The resulting pull request is ready for review and includes traceability details such as “executed-by” and “runbooks url”.
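Traceability metadata of this kind is easy to attach mechanically. The sketch below uses hypothetical field names modeled on the “executed-by” and “runbooks url” details, and the example URL is purely illustrative. Because the body is assembled from run data rather than typed by hand, every generated PR carries the same fields.

```typescript
// Hypothetical run metadata; the output labels mirror the PR details described in the text.
interface RunInfo {
  runbookName: string;
  runbookUrl: string;
  executedBy: string;
  steps: string[];
}

// Render a pull-request body with a fixed metadata section for traceability.
function renderPrBody(run: RunInfo): string {
  const lines = [
    `Automated changes from runbook \`${run.runbookName}\`.`,
    "",
    "Steps:",
    ...run.steps.map((s, i) => `${i + 1}. ${s}`),
    "",
    `executed-by: ${run.executedBy}`,
    `runbooks url: ${run.runbookUrl}`,
  ];
  return lines.join("\n");
}

console.log(
  renderPrBody({
    runbookName: "migrate-logging-to-opentelemetry",
    runbookUrl: "https://example.com/runbooks/migrate-logging-to-opentelemetry",
    executedBy: "ci-bot",
    steps: ["scan", "plan", "execute", "verify"],
  }),
);
```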

Enterprise Enablers

To support real-world adoption, Runbooks include capabilities beyond the workflow itself:

- Context awareness: Agents draw on documentation, style guides, and dependency graphs to stay aligned with project standards.

- Secure execution: Workflows can delegate to self-hosted agents, keeping sensitive code within enterprise infrastructure.

- Preconfigured environments: Agents start with the right libraries and tools, reducing setup overhead.

- Model flexibility: Teams can bring their own agents, such as Claude Code, Gemini, Aider, and Codex, rather than being locked to a single backend.
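Bringing your own agent implies that the workflow talks to agents through a narrow interface. Below is a minimal sketch of that adapter pattern, with a hypothetical `Agent` interface and stub adapters named after two of the tools for illustration. These are not the real Claude Code or Aider integrations, which would wrap each tool's own CLI or API. The runbook step stays the same whichever backend is configured.

```typescript
// Hypothetical agent contract: the runbook depends only on this interface.
interface Agent {
  name: string;
  runTask(instruction: string, files: string[]): Promise<string>;
}

// Stub adapter; a real one would invoke the tool's CLI or API.
class StubAgent implements Agent {
  name: string;
  constructor(name: string) {
    this.name = name;
  }
  async runTask(instruction: string, files: string[]): Promise<string> {
    return `${this.name} handled "${instruction}" on ${files.length} file(s)`;
  }
}

const registry = new Map<string, Agent>([
  ["claude-code", new StubAgent("claude-code")],
  ["aider", new StubAgent("aider")],
]);

// The same step runs against whichever agent the team configured.
async function runStep(agentId: string, instruction: string, files: string[]): Promise<string> {
  const agent = registry.get(agentId);
  if (!agent) throw new Error(`unknown agent: ${agentId}`);
  return agent.runTask(instruction, files);
}

runStep("aider", "replace console.log", ["src/a.ts"]).then(console.log);
```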

Runbooks can be inspected and edited directly in Markdown format, allowing developers to refine steps before execution.

Example Spec-driven development project: Refactor Legacy Logging to OpenTelemetry

Spec-driven development enables a repeatable logging migration that can be versioned and shared across services.

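```yaml
runbook:
  name: migrate-logging-to-opentelemetry
  steps:
    # Gather context: every JS/TS file under ./services
    - scan:
        repo: ./services
        files: ["**/*.js", "**/*.ts"]
    # State the intended outcome; the agent expands it into concrete steps
    - plan:
        description: >
          Replace all instances of console.log and legacy Winston logger
          calls with OpenTelemetry structured logging.
    # Apply the edits, add the dependency, initialize the logger, add tests
    - execute:
        actions:
          - search_replace:
              patterns:
                - match: "console.log"
                  replace: "otelLogger.info"
                # If match is treated as a regex, "winston.*" also consumes
                # the rest of the line; a narrower pattern is safer.
                - match: "winston.*"
                  replace: "otelLogger"
          # The OpenTelemetry JS Logs API ships separately as @opentelemetry/api-logs
          - add_dependency: "@opentelemetry/api"
          - update_file: telemetry/initLogger.js
          - add_tests: tests/loggingRefactor.test.js
    # Fail the run if the test suite fails
    - verify:
        run: npm test
```

2. Spec-Driven Development Workflow Generates a PR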

Legacy console.log and Winston calls (left) are replaced with OpenTelemetry structured logging (right). The PR links back to the Runbook that created it, ensuring traceability and reproducibility.

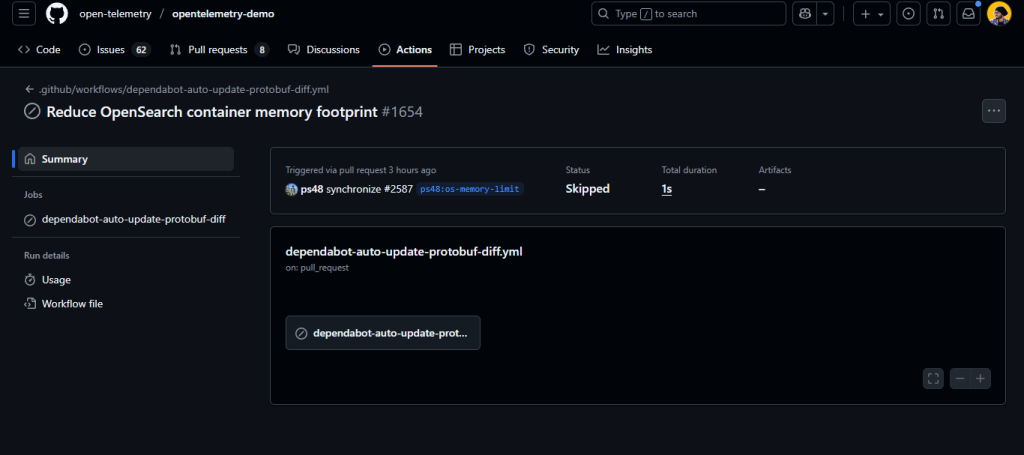
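To see why pattern scope matters in the runbook's `search_replace` step, here is a small sketch contrasting literal and anchored replacement. It treats `match` as a literal string for the `console.log` case and uses a hand-written regular expression for Winston. The runbook schema itself does not say which semantics apply. `otelLogger` is assumed to be a project-level wrapper, as the `telemetry/initLogger.js` step suggests, because OpenTelemetry's own Logs API exposes `emit()` rather than `info()`.

```typescript
// Literal replacement, as the console.log pattern in the runbook suggests.
function replaceLiteral(source: string, match: string, replacement: string): string {
  return source.split(match).join(replacement);
}

// Narrow regex: rewrite only winston.<level>( calls and keep their arguments.
const WINSTON_CALL = /\bwinston\.(info|warn|error|debug)\(/g;

function migrateLogging(source: string): string {
  const noConsole = replaceLiteral(source, "console.log(", "otelLogger.info(");
  return noConsole.replace(WINSTON_CALL, "otelLogger.$1(");
}

const before = [
  'console.log("server started", { port });',
  'winston.error("db down", err);',
].join("\n");

console.log(migrateLogging(before));
// otelLogger.info("server started", { port });
// otelLogger.error("db down", err);

// For contrast, a greedy regex discards everything after "winston":
console.log('winston.error("db down", err);'.replace(/winston.*/, "otelLogger"));
// otelLogger
```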

3. Workflow Execution in CI/CD

The Runbook is executed via GitHub Actions, leaving an auditable record of trigger, status, and workflow details. Every execution is logged and reproducible as part of the CI/CD pipeline.

Impact of Spec-Driven Workflows on Developer Experience

By structuring collaboration around specifications, developers move past the fragility of prompts. Instead of ephemeral instructions, teams gain workflows that are testable, auditable, and reusable. Each workflow becomes an artifact: versioned, shareable, and aligned with existing practices such as pull requests and CI/CD pipelines.

This shift feels natural for engineers because it mirrors how they already think in terms of automation pipelines. The difference is that agents are now first-class participants in those pipelines: scanning repositories, planning changes, executing modifications, and verifying results in a repeatable loop.

Positioning AI collaboration this way lays the groundwork for a deeper comparison between prompt-driven and spec-driven approaches, showing how developer–agent workflows evolve from brittle one-offs into reliable, team-ready systems.

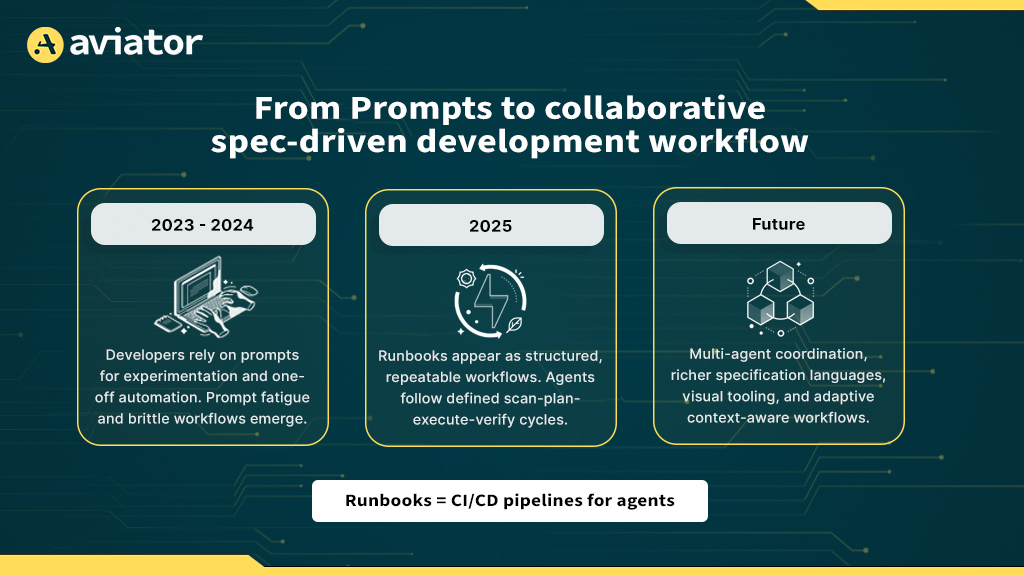

From Prompts to Spec-Driven Workflows: Key Changes

Replacing one-off natural language instructions with structured workflows introduces predictability, reproducibility, and governance. The differences show up most clearly across four areas:

1. Control Over Process

Prompts are transactional: you issue an instruction, receive an output, and iterate until it appears correct. That loop is fast but unpredictable. Spec-driven workflows, on the other hand, follow a defined sequence of scan, plan, execute, and verify. Developers can decide whether to keep humans in the loop for approvals or allow agents to act autonomously when confidence is high.

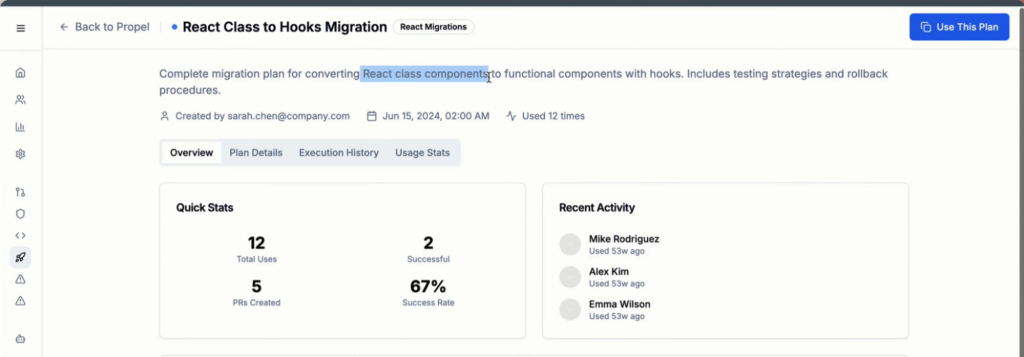

2. Reusability and Reproducibility

The dashboard surfaces potential migrations as opportunities, complete with size and confidence level indicators, giving developers visibility before execution.

Prompts are hard to reuse. An instruction that works today may fail tomorrow as models evolve or context changes. Spec-driven workflows, by contrast, are artifacts: versioned, shareable, and testable. They can be stored in source control, peer-reviewed like code, and run reliably across different environments.
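Because a spec is an artifact, it can be checked like code before anyone runs it, for example in CI on every change to the spec. The sketch below assumes a hypothetical `RunbookSpec` shape loosely modeled on the YAML example earlier in this article. It enforces two illustrative team rules: a name is required, and every spec must end with a verify step.

```typescript
type StageName = "scan" | "plan" | "execute" | "verify";

// Hypothetical in-memory form of a runbook, loosely following the YAML example.
interface RunbookSpec {
  name: string;
  steps: StageName[];
}

// Return a list of problems; an empty list means the spec passes these checks.
function lintRunbook(spec: RunbookSpec): string[] {
  const problems: string[] = [];
  if (spec.name.trim() === "") problems.push("name is required");
  if (spec.steps.length === 0) {
    problems.push("at least one step is required");
  } else if (spec.steps[spec.steps.length - 1] !== "verify") {
    problems.push("last step must be verify");
  }
  return problems;
}

console.log(lintRunbook({ name: "migrate-logging-to-opentelemetry", steps: ["scan", "plan", "execute", "verify"] })); // []
console.log(lintRunbook({ name: "", steps: ["scan", "execute"] })); // [ 'name is required', 'last step must be verify' ]
```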

3. Context Propagation

Prompt-based workflows depend on developers repeatedly pasting code, logs, or documentation into the session. The model’s memory resets, forcing teams to restate the same details. Spec-driven workflows embed context by design: agents scan repositories, reference dependency graphs, and operate within persistent environments. With context built in, agents don’t start from scratch on every interaction.
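Referencing a dependency graph lets an agent work out which files a change can affect, instead of asking a developer to paste them in. Here is a sketch of that lookup, assuming a hypothetical import graph that maps each file to the files it imports. The function inverts the edges and walks them breadth-first. The result is every file that transitively depends on the changed module.

```typescript
// Hypothetical import graph: each file maps to the files it imports.
type ImportGraph = Record<string, string[]>;

// Return every file that directly or transitively imports `changed`.
function dependentsOf(graph: ImportGraph, changed: string): string[] {
  // Invert the edges: module -> files that import it.
  const importers = new Map<string, string[]>();
  for (const [file, deps] of Object.entries(graph)) {
    for (const dep of deps) {
      const list = importers.get(dep) ?? [];
      list.push(file);
      importers.set(dep, list);
    }
  }

  // Breadth-first walk over reverse edges; `seen` also guards against cycles.
  const seen = new Set<string>([changed]);
  const queue: string[] = [changed];
  while (queue.length > 0) {
    const current = queue.shift()!;
    for (const importer of importers.get(current) ?? []) {
      if (!seen.has(importer)) {
        seen.add(importer);
        queue.push(importer);
      }
    }
  }
  seen.delete(changed);
  return Array.from(seen).sort();
}

const graph: ImportGraph = {
  "src/server.ts": ["src/routes.ts", "src/logger.ts"],
  "src/routes.ts": ["src/logger.ts"],
  "src/logger.ts": [],
  "src/util.ts": [],
};

console.log(dependentsOf(graph, "src/logger.ts")); // [ 'src/routes.ts', 'src/server.ts' ]
```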


4. Collaboration

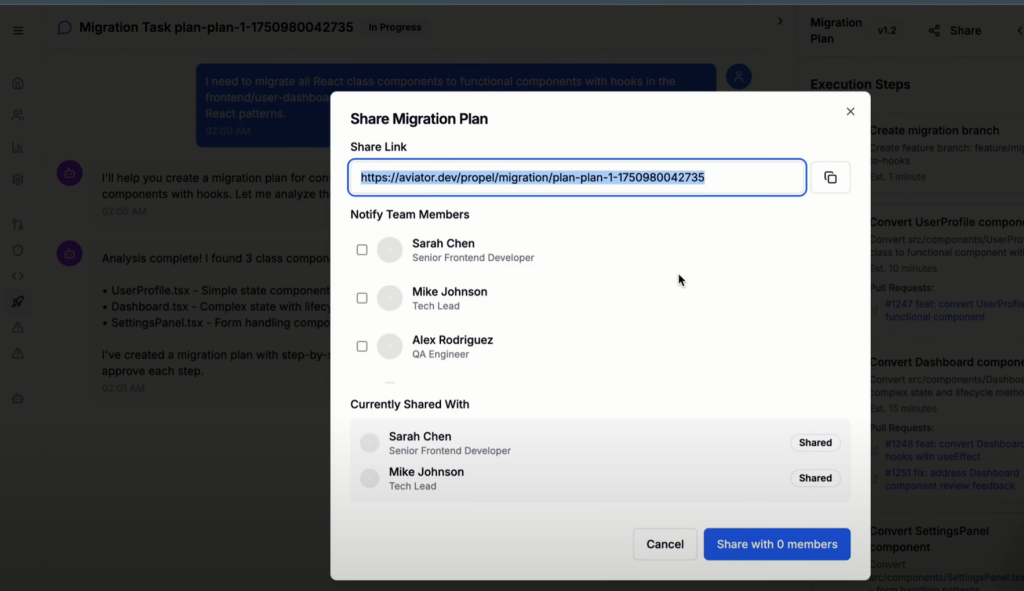

Runbooks generate pull requests that teams can review and comment on, embedding collaboration directly into the workflow. Runbooks can also be shared with teammates, reinforcing existing review practices.

Prompts are highly individual; two developers can phrase the same request and get inconsistent results. This fragmentation makes collaboration painful, since there’s no shared artifact to review or refine. Spec-driven workflows transform collaboration into a first-class process, enabling teams to review plans, comment on pull requests, and iterate together on workflows that remain consistent across executions.

Together, these changes illustrate why spec-driven workflows are more than an incremental improvement. They formalize the developer-agent relationship in a way that prompts cannot, preparing teams for scalable, secure, and reproducible collaboration.

To see how these changes play out in practice, we now turn to real-world use cases where collaborative spec-driven development demonstrates clear advantages.

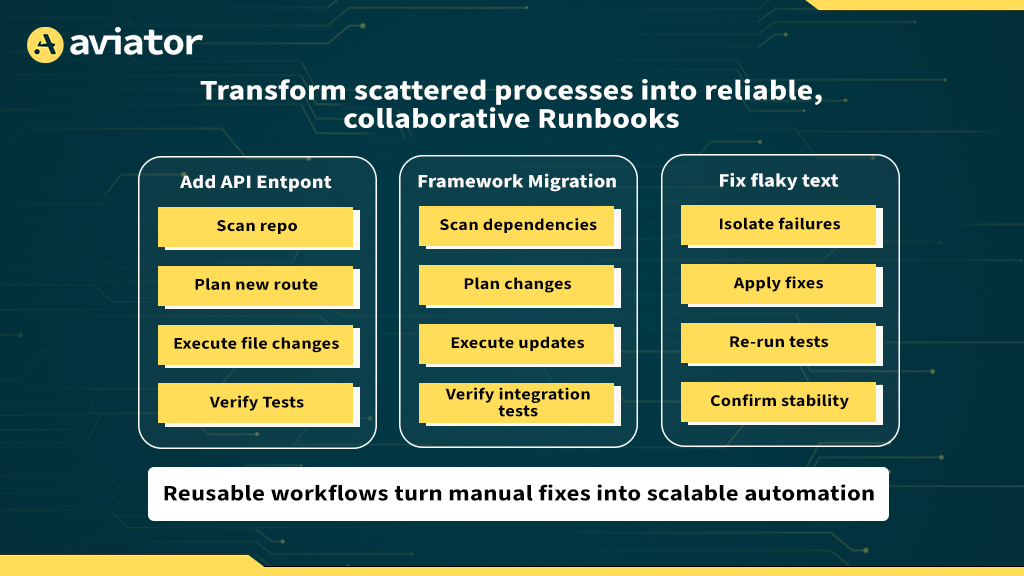

Transform scattered processes into reliable, collaborative Runbooks

1. Adding a New API Endpoint

Consider the task of introducing a new /status endpoint in a service. With prompts, the agent might generate controller logic or route definitions, but developers still need to verify file placement, adhere to conventions, and ensure test coverage. Collaborative spec-driven development formalizes this into a multi-step artifact: scanning the repository for existing routes, planning the new controller, creating files, updating routes, and adding tests. The process is repeatable, meaning that if the same endpoint is needed in a different service, the spec-driven development workflow can be reused with minimal changes.
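Reuse is easiest when the service-specific parts of the spec are parameters. The sketch below assumes a hypothetical `statusEndpointRunbook` factory and an illustrative file layout (`src/routes`, `src/controllers`, `test/`). The same five steps from the paragraph above are generated for any service by changing one argument.

```typescript
// Hypothetical step shape for an endpoint-adding runbook.
interface EndpointStep {
  kind: "scan" | "plan" | "create" | "update" | "test";
  target: string;
}

// Build the /status runbook for a given service directory.
// The directory layout is an illustrative assumption.
function statusEndpointRunbook(serviceDir: string): EndpointStep[] {
  return [
    { kind: "scan", target: `${serviceDir}/src/routes` }, // find existing routes
    { kind: "plan", target: "GET /status controller" },
    { kind: "create", target: `${serviceDir}/src/controllers/status.ts` },
    { kind: "update", target: `${serviceDir}/src/routes/index.ts` },
    { kind: "test", target: `${serviceDir}/test/status.test.ts` },
  ];
}

// Reusing the spec for a second service only changes the argument.
const billing = statusEndpointRunbook("services/billing");
const search = statusEndpointRunbook("services/search");
console.log(billing[2].target); // services/billing/src/controllers/status.ts
console.log(search[2].target); // services/search/src/controllers/status.ts
```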

2. Framework or Language Migrations

Example Runbook for Converting React Class Components into Functional Components with Hooks: A Common Migration Scenario.

Upgrading from Express 4 to Express 5, or migrating from Python 3.10 to 3.12, is not a one-off task. It involves updating multiple files, handling deprecations, and running extensive tests. Prompts struggle here because each migration step requires context awareness and continuity across many files. Collaborative spec-driven development can encapsulate this migration path into a series of planned steps: scanning dependencies, planning targeted changes, executing the code modifications, and verifying against integration tests.
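The scanning half of such a migration is often a search for removed APIs. Here is a minimal sketch for Express 4 to 5 that covers only three removals listed in the Express 5 migration guide: `app.del()`, `req.param()`, and lowercase `res.sendfile()`. The regexes are illustrative heuristics that assume the conventional `app`/`req`/`res` variable names. They are not a complete or authoritative check.

```typescript
// A few Express 4 APIs removed in Express 5, with their replacements.
// Assumes the conventional app/req/res variable names.
const REMOVED_APIS: { pattern: RegExp; replacement: string }[] = [
  { pattern: /\bapp\.del\(/, replacement: "app.delete()" },
  { pattern: /\breq\.param\(/, replacement: "req.params / req.body / req.query" },
  { pattern: /\bres\.sendfile\(/, replacement: "res.sendFile()" },
];

interface Finding {
  file: string;
  line: number; // 1-based line number
  replacement: string;
}

// Report every line that uses one of the removed APIs.
function findRemovedApis(files: Record<string, string>): Finding[] {
  const findings: Finding[] = [];
  for (const [file, source] of Object.entries(files)) {
    source.split("\n").forEach((text, i) => {
      for (const { pattern, replacement } of REMOVED_APIS) {
        if (pattern.test(text)) findings.push({ file, line: i + 1, replacement });
      }
    });
  }
  return findings;
}

const findings = findRemovedApis({
  "routes/users.js": [
    "app.del('/users/:id', removeUser);",
    "app.get('/users/:id', (req, res) => res.json(req.param('id')));",
  ].join("\n"),
});
console.log(findings);
```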

3. Fixing Flaky Tests and Optimizing Build Times

Flaky tests often appear sporadically, requiring careful reproduction, thorough code inspection, and repeated runs. Prompts may generate patches, but they lack the discipline of systematically isolating variables. Collaborative spec-driven development allows teams to define workflows that isolate test failures, apply fixes, rerun tests, and confirm stability. Similarly, for optimizing build pipelines, spec-driven development can automate profiling, suggest dependency adjustments, and validate improvements across multiple runs.
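Isolating flakiness usually starts with rerunning a test under unchanged conditions and looking at the outcomes. Here is a sketch of that classification step, assuming a hypothetical `runTest` callback that reports pass/fail for a single run. Mixed results across reruns mark a test as flaky. Consistent results point to either a genuine failure or a stable pass.

```typescript
type Verdict = "stable-pass" | "stable-fail" | "flaky";

// Rerun a test `runs` times and classify it by the mix of outcomes.
function classify(runTest: () => boolean, runs: number): Verdict {
  if (runs < 1) throw new Error("runs must be >= 1");
  let passes = 0;
  for (let i = 0; i < runs; i++) {
    if (runTest()) passes++;
  }
  if (passes === runs) return "stable-pass";
  if (passes === 0) return "stable-fail";
  return "flaky";
}

// Deterministic stand-in for a test that fails on every third run.
let attempt = 0;
const timingSensitive = () => ++attempt % 3 !== 0;

console.log(classify(timingSensitive, 10)); // flaky
console.log(classify(() => true, 10)); // stable-pass
```

A `stable-pass` verdict after N runs is evidence rather than proof. Rare flakes may need many more reruns to surface.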

Having seen how collaborative spec-driven development applies to everyday developer tasks, the next step is to compare it directly against prompt-based tools to highlight where each approach fits best.

Comparative Analysis

| Dimension | Prompt Tools / Copilots | Collaborative spec-driven development |
| --- | --- | --- |
| Fit in workflows | Ideal for quick prototyping, one-off tasks, or exploratory coding where speed matters more than consistency. | Designed for structured, repeatable, multi-step tasks such as migrations, refactoring, and team-wide automation. |
| Strengths | Flexible, fast iteration, minimal setup overhead; useful for generating boilerplate or exploring options. | Reproducible, auditable, and collaborative; integrates naturally with CI/CD pipelines and enterprise workflows. |
| Weaknesses | Brittle, lacks state, difficult to scale across teams; no guarantees of repeatability. | Requires upfront investment in defining workflows and integrating with infrastructure. |
| Collaboration model | Individual developer + ad-hoc sharing of outputs; limited visibility or review cycles. | Shared artifacts under version control; explicit planning, review, and feedback loops for team collaboration. |
| Trade-offs | Maximizes speed and flexibility, but at the cost of safety, governance, and reproducibility. | Prioritizes safety, control, and scalability, sometimes at the expense of spontaneity and quick iteration. |

Both approaches have their place. For quick experiments, prompts are hard to beat. But as soon as workflows need to be shared, audited, or repeated, spec-driven workflows become the superior choice.

With this comparison in mind, the next logical step is to explore how teams can effectively implement spec-driven workflows, starting small and scaling to enterprise adoption.

FAQs

1. What is spec-driven development?

Spec-driven development is an approach to working with AI agents in which developers define the desired outcome instead of prescribing how to achieve it through prompts. The specification is captured as a structured workflow, often referred to as a Runbook, that agents follow step by step. This makes the process reproducible, auditable, and shareable across teams, unlike prompt-driven interactions, which are brittle and inconsistent at scale.

2. What is context engineering?

Context engineering is the practice of defining and supplying the right information an AI agent needs to perform a task correctly. Instead of manually pasting snippets of code or documentation into prompts, context engineering automates the process: scanning repositories, referencing dependency graphs, and applying project-specific style guides or policies. In spec-driven development, context engineering is built into the workflow itself, ensuring agents act with a full, consistent understanding of the environment. This reduces prompt drift and makes outputs reproducible across runs and teams.

3. What are remote agentic environments?

Remote agentic environments are cloud- or server-hosted execution spaces where AI agents operate with persistent context. Instead of running locally and losing state between sessions, agents work in remote environments preloaded with project code, dependencies, and tooling. This persistence allows workflows to carry context across runs, reducing prompt drift and repetitive setup.

4. Why do developers need spec-driven workflows instead of relying on prompts?

Prompts are useful for quick experiments, but they often collapse under the complexity of real-world software. They lack persistence, reproducibility, and shared artifacts, making it difficult for teams to collaborate or enforce standards. Spec-driven workflows solve this by turning one-off instructions into structured artifacts.

With a workflow in place, teams can:

- Reuse the same process across projects or services.

- Review and audit workflows like code to ensure governance and compliance.

- Share context automatically, so engineers are not manually re-supplying snippets in every session.

- Integrate workflows with CI/CD systems, making agents part of the development pipeline.