Flaky tests: How to manage them practically

Automated testing is a standard practice in professional software development teams. As developers, we rely on a suite of tests to validate that the code we’re writing is doing what we expect it to do.

Without tests, we’re flying blind. We think that the code is doing what we intended it to do, but we can’t be certain. To be honest, we can’t even be 100% certain with tests because they rarely cover all edge cases, but the total number of bugs is usually much lower with a good test suite.

As long as the test suite runs successfully, everything is good. I add more code and more tests and developer life is good. But what do I do if one of the tests sometimes fails and sometimes passes?

I have a flaky test on my hands. How I hate it when that happens! After the initial rush of rage toward the author of that test is over (because I found out that I wrote the test myself a month ago), the first coherent thought is “how can I fix this flaky test?”

That’s what we’re going to explore in this article. We’re going to discuss what makes flaky tests so dreadful and then dive into the options for dealing with them. As usual, each of those options has its trade-offs.

What’s a flaky test?

Before we dive deeper, let’s discuss what we’ll consider a flaky test in the context of this article and how we discover them.

We define a flaky test as follows:

A flaky test is a test that sometimes passes and sometimes fails in the same environment.

In a common workflow, every time we change some code, we run a suite of automated tests on our local machine before we push the code changes to a remote Git repository. From there, usually, a continuous integration (CI) pipeline picks up the changes and runs the tests again before the code is packaged for production deployment.

That means the tests are executed in a local environment and a CI pipeline. A flaky test sometimes fails and sometimes passes in the same environment:

| | Local run | CI run #1 | CI run #2 | CI run #3 |
|---|---|---|---|---|
| Test 1 | 🟢 | 🟢 | ❌ | 🟢 |
| Test 2 | 🟢 | ❌ | 🟢 | 🟢 |
| Test 3 | 🟢 | 🟢 | 🟢 | 🟢 |

In the example above, tests 1 and 2 pass locally, but they sometimes fail in the CI pipeline. Only on the third attempt did all tests pass in the CI pipeline.

A test may also be flaky in the local environment:

| | Local run | CI run #1 | CI run #2 | CI run #3 |
|---|---|---|---|---|
| Test 1 | ❌ | 🟢 | ❌ | 🟢 |
| Test 2 | 🟢 | 🟢 | 🟢 | 🟢 |
| Test 3 | 🟢 | 🟢 | 🟢 | 🟢 |

In this case, test 1 failed once locally. After we ran the test suite again, it passed locally, only to fail again in the second CI run. This case is less common than the previous one, however, because if the flakiness is reproducible locally, it’s much easier to fix and is usually fixed before the code is even pushed.

If a test always fails in a certain environment, it’s not a flaky test, but a broken test:

| | Local run | CI run #1 | CI run #2 | CI run #3 |
|---|---|---|---|---|
| Test 1 | 🟢 | 🟢 | 🟢 | 🟢 |
| Test 2 | 🟢 | ❌ | ❌ | ❌ |
| Test 3 | 🟢 | 🟢 | 🟢 | 🟢 |

In this example, test 2 passes locally, but it always fails in the CI environment. This test is broken, not flaky. It’s the poster child for the meme “it runs on my machine”. The tactics for flaky tests we’re going to discuss below should not be applied to broken tests! Instead, fix the test! It probably has some dependency on the host it’s running on that needs to be removed.

Flaky tests are wasting time and money!

From a developer’s perspective, it’s rather obvious that flaky tests are evil. But if you’re not a developer or you need to convince someone that it’s a good idea to fix them, this section is for you.

So, why are flaky tests so evil? Because they’re wasting developer time (and that means money).

Let’s look at a common pull request workflow where one developer raises a pull request with their changes and another developer reviews and approves the changes before they are merged into the main branch. To make sure that we’re not pushing bad changes into production, the test suite is executed at different times in this workflow:

- locally, before the developer pushes the changes

- in CI, on the feature branch the developer has pushed the changes to

- locally, after the developer has made changes after a pull request review

- in CI, on the feature branch updated with the new changes

- in CI, on the main branch, after the pull request has been merged

That means that in a very common workflow like this, the test suite is executed at least 5 times! And if only one test in that test suite fails, the whole build will fail, because we don’t want to push potentially bad changes into production!

Let’s do the math. Say we have one flaky test that fails about 10% of the time and a build takes 2 minutes (I’m optimistic here!). That means each of the 5 steps has a 90% chance to pass. Each step has a 10% chance that we need to rerun the test suite and wait 2 minutes. That means on average, we’d have an additional wait time of 120/10 = 12 seconds per step or 12 * 5 = 60 seconds in total. Let’s say a developer does this 5 times a day on average. That’s 5 minutes lost per day per developer.
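To play with the numbers, the back-of-the-napkin calculation above can be put into a few lines of Java. This is only a sketch: the class and method names are made up for illustration, and it uses the same simplification as the text, namely that a failed run passes on its first re-run.

```java
// Hypothetical helper that mirrors the example calculation from the text.
class FlakyTestCost {

    /**
     * Expected extra wait per developer per day, in seconds.
     * First-order estimate: a failed run is assumed to pass on the first re-run.
     */
    static double extraWaitSecondsPerDay(double failureRate, double buildSeconds,
                                         int runsPerChange, int changesPerDay) {
        double perRun = failureRate * buildSeconds;   // e.g. 10% of 120 s
        double perChange = perRun * runsPerChange;    // e.g. 5 test runs per pull request
        return perChange * changesPerDay;             // e.g. 5 pull requests per day
    }

    public static void main(String[] args) {
        // The example values from the text: 10% failure rate, 2-minute build,
        // 5 test runs per change, 5 changes per day.
        double seconds = extraWaitSecondsPerDay(0.10, 120, 5, 5);
        System.out.println("Extra wait per developer per day (minutes): " + seconds / 60);
    }
}
```

Under the same assumptions, a 20-minute build instead of a 2-minute one would cost about 50 minutes per developer per day, and that is before counting any context switching.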

5 minutes of additional wait time per day and developer doesn’t sound too bad. But, every time a developer has to wait for something, they are tempted to multitask. They will start working on something else to fill the time. That means they lose focus and have to switch contexts. Refocusing on a piece of work easily multiplies the amount of time needed by a significant factor. And that is with a flaky test that only fails 10% of the time!

The calculation above is only an example. It’s different for everyone depending on the project context and the workflow of a developer. But note that many test suites take much longer than 2 minutes to execute and that this is a very optimistic example! It happens to every developer every day, and the delays from context switching quickly add up to hours and hours of wasted time. And we haven’t even talked about the frustration this causes for the developers!

Instead of living with flaky tests, we should do something about them as soon as possible! Let’s look at some options.

Tactic #1: Delete the flaky tests

The easiest way out is, of course, to delete the flaky tests. Tests can’t fail when they’re not running!

Deleting tests seems like a very bad idea, and more often than not, it is. But if the value of a test is questionable and it’s causing overhead by being flaky, deleting the offending test may be a valid decision to make.

But when is a test valuable and when isn’t it? That’s a question that can only be answered in the context of a given project. Discuss it with the team and use your best judgment.

Tactic #2: Disable the flaky tests

Usually, however, we don’t want to delete a flaky test, because it provides some value to us. When it’s green, we know that a certain feature is working as expected, after all.

To unblock the CI pipeline, we can disable the flaky tests. In the Java world, for example, we can do this by annotating a test with the @Disabled annotation, and JUnit will not run these tests. Test frameworks in other languages provide similar features.

Disabling a test is only better than deleting a test if we actually fix it later, however. Otherwise, the test is a liability like any code that is not executed. Over time, the production code changes and the disabled test will most likely not be maintained. That means that if the test is run in the future, it’s probably not going to be just flaky anymore, but broken and flaky.

We can have the best intentions about going back to fix this disabled test in the future. We can put it in an “eng health” backlog or similar. But, it turns out, there are always more urgent things to do. So if you don’t trust yourself or your team to actually go back and fix a test that you disabled for the “short term”, then this is probably not a route that you should take.

Tactic #3: Mark the flaky tests

Slightly better than disabling a flaky test is to keep it running and mark it as flaky. In Java, we could create an annotation @Flaky, for example, that marks a test as being flaky. If it fails, a developer will know right away that it is a flaky test. Instead of wasting time investigating the test failure, they will know that they just need to rerun the test and it will pass eventually.

Note that, while still not satisfactory, this is much better than simply disabling a flaky test! The developer who runs into this test failure saves the minutes or even hours they would otherwise spend investigating it. Someone else has probably already done the investigation (albeit unsuccessfully) and declared this test flaky. Just re-run the test already!
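A marker annotation like this takes only a few lines of plain Java. The following is a minimal sketch, not a standard JUnit feature: the annotation name @Flaky comes from the text above, while the `reason` attribute, the `CheckoutTest` class, and the `FlakyMarker` helper are hypothetical names invented for the example.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;

// Hypothetical marker annotation; the "reason" attribute is an assumption.
@Retention(RetentionPolicy.RUNTIME) // keep the marker visible to tooling at runtime
@Target(ElementType.METHOD)
@interface Flaky {
    String reason() default "";
}

// Hypothetical test class with one marked and one unmarked test.
class CheckoutTest {
    @Flaky(reason = "sometimes times out while waiting for the payment stub")
    void completesCheckout() {
        // test body omitted
    }

    void calculatesTotal() {
        // test body omitted
    }
}

class FlakyMarker {
    /** Returns a hint for the failure report, or an empty string for unmarked tests. */
    static String hintFor(Class<?> testClass, String methodName) {
        for (Method method : testClass.getDeclaredMethods()) {
            Flaky flaky = method.getAnnotation(Flaky.class);
            if (method.getName().equals(methodName) && flaky != null) {
                return "Known flaky test (" + flaky.reason() + "): re-run before investigating.";
            }
        }
        return "";
    }

    public static void main(String[] args) {
        System.out.println(hintFor(CheckoutTest.class, "completesCheckout"));
    }
}
```

Even without any tooling behind it, the annotation documents the flakiness right where a developer will look when the test fails. The reflection helper only hints at how a test report could pick the marker up.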

Tactic #4: Mark the flaky tests automatically

Manually marking tests as “flaky” is usually better than disabling a flaky test, but it still requires a manual step. At least one developer has to invest the time needed to identify and mark the flaky test.

If you’re regularly fighting flaky tests (for example if you’re working on a big legacy codebase), it might pay off to automate this process. And what better place to automate this than in the CI pipeline?

In the CI pipeline, the tests are executed regularly. So the CI pipeline should know which tests are failing intermittently and can mark them as flaky tests. It can prepare a “flaky test report” for us that shows exactly which tests have failed, how often they failed, and which of them are considered flaky. It might even provide the option to automatically re-run the failed flaky tests.

It’s not straightforward to identify flaky tests from the viewpoint of the CI pipeline, though. A test may fail because a developer has broken it, for example. And it might be fixed with the next commit, then broken again in the next commit. We wouldn’t consider this test flaky if we have worked on the code and broken and repaired it a couple of times. After all, the test failed for a valid reason. So, the CI pipeline would have to take the commit history into consideration … and even then, it will probably find a false positive here and there.
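One way to take the commit history into account is to compare only runs of the same commit: if a test has both passed and failed on identical code, the code change cannot be the reason. The sketch below illustrates that heuristic. It is an assumption made for this example, not a description of how any particular CI tool works, and the class and method names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical detector: a test counts as flaky if it has both passed and
// failed on the same commit, because the code did not change between those runs.
class FlakyTestDetector {
    private static final int PASSED = 1;
    private static final int FAILED = 2;

    // test name -> (commit SHA -> bitmask of the outcomes seen on that commit)
    private final Map<String, Map<String, Integer>> outcomes = new HashMap<>();

    void record(String testName, String commitSha, boolean passed) {
        outcomes.computeIfAbsent(testName, name -> new HashMap<>())
                .merge(commitSha, passed ? PASSED : FAILED, (seen, latest) -> seen | latest);
    }

    Set<String> flakyTests() {
        Set<String> flaky = new TreeSet<>();
        outcomes.forEach((testName, byCommit) -> {
            if (byCommit.containsValue(PASSED | FAILED)) { // both outcomes on one commit
                flaky.add(testName);
            }
        });
        return flaky;
    }

    public static void main(String[] args) {
        FlakyTestDetector detector = new FlakyTestDetector();
        detector.record("test1", "abc123", true);
        detector.record("test1", "abc123", false); // same commit, different result
        detector.record("test2", "abc123", false); // broken by this commit ...
        detector.record("test2", "def456", true);  // ... and repaired by the next one
        System.out.println(detector.flakyTests()); // prints [test1]
    }
}
```

This heuristic only works if a commit is built more than once, for example through re-runs. It can still produce false positives, for instance when a CI run fails because of an infrastructure hiccup rather than because of the test.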

Tactic #5: Rerun flaky tests

This brings us to the obvious solution of handling flaky tests: when they fail, we can just re-run them. If a test fails in the CI pipeline, we re-run the whole pipeline or – if the CI tool supports this – we re-run only the build step that executes the tests to save some time.

As the back-of-the-napkin calculation above shows, simply re-running flaky tests is not great. The developer will be interrupted in their flow and will probably start working on something else. Then, when “something else” is finished, they will turn back to the flaky test and spend time getting back into context.

So, this is not a good option, either.

Tactic #6: Dedicate a build step for flaky tests

An improvement to just rerunning the build pipeline is to move all known flaky tests into a dedicated build step – and then re-run only that build step manually if it fails.

Flaky tests train us to just hit the “re-run” button when a test has failed, without looking at the test failure itself. Only after hitting the “re-run” button for the second or third time might we notice that a non-flaky test has failed and that we should fix it. We have just needlessly lost multiple build cycles’ worth of time!

By moving the flaky tests out of the build step that executes all the other (non-flaky) tests, that build step will have a better chance of running successfully. If it does fail, it almost certainly means that there is a real test failure, and re-running the build step will not fix the issue. This will trigger us to look at the test result after the first failure instead of just trying to re-run the build step a couple of times.

The dedicated “flaky test build step” may still fail due to flaky tests, of course. If it does, we can still hit the “re-run” button. Since there are hopefully only a few flaky tests, re-running this build step will take much less time than re-running the build step executing all other tests. Maybe it’s fast enough that the developer doesn’t get bored and doesn’t start doing something else, saving precious minutes of context-switching.

Tactic #7: Rerun flaky tests automatically

A dedicated build step for flaky tests is well and good, but we still need to re-run flaky tests manually, which takes our attention and time. How about an automated retry mechanism?

Once we have identified a test as being flaky we can simply wrap it in an exception handler like this:

```java
int maxAttempts = 3;
int attempt = 1;

while (true) {
    try {
        runTheTest(); // this call is flaky!
        break;
    } catch (AssertionError | Exception e) {
        // JUnit reports failed assertions as AssertionError, which is not an Exception
        // bubble up the test failure only after a couple of attempts
        if (attempt == maxAttempts) {
            throw e;
        }
    }
    attempt++;
}
```

We just run the test in a loop and swallow the test failure. Only after a certain number of failed attempts will we publish the test failure to the test framework (by bubbling up the exception) so that it marks the test as failed. If the test has run successfully once, it will break out of the loop.

This is not the nicest code to write, so check whether the test framework you’re using supports re-running tests out of the box.

In the Java world, for example, the JUnit Pioneer extension for JUnit provides an annotation that allows re-running tests without writing ugly while loops:

```java
@RetryingTest(maxAttempts = 3, suspendForMs = 100)
void runTheTest() {
    ...
}
```

This extension even supports a wait time between attempts, which improves the probability of success when the test is flaky because of async code and race conditions.
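If the test framework at hand has no such feature, a retry with a pause between attempts can be sketched in plain Java as a reusable helper. This is an illustration under assumptions, not the extension’s actual implementation: the `Retry` class and its `withRetries` method are hypothetical, and the parameter names are borrowed from the annotation above only for readability.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper: runs a test body up to maxAttempts times and pauses
// between attempts. Only the last failure is reported.
class Retry {

    static void withRetries(int maxAttempts, long suspendForMs, Runnable test) {
        for (int attempt = 1; ; attempt++) {
            try {
                test.run();
                return; // passed: stop retrying
            } catch (RuntimeException | AssertionError failure) {
                if (attempt >= maxAttempts) {
                    throw failure; // out of attempts: report the last failure
                }
            }
            pause(suspendForMs); // give asynchronous work time to settle
        }
    }

    private static void pause(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            throw new IllegalStateException("Interrupted while waiting to retry", e);
        }
    }

    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        // Simulated flaky test: fails twice, then passes on the third attempt.
        withRetries(3, 100, () -> {
            if (calls.incrementAndGet() < 3) {
                throw new AssertionError("simulated flaky failure");
            }
        });
        System.out.println("passed after " + calls.get() + " attempts"); // prints "passed after 3 attempts"
    }
}
```

As a rough guide, if each attempt failed independently 10% of the time, all three attempts would fail together only about 0.1% of the time. Real flaky failures are often not independent, though, which is exactly why a pause between attempts can help.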

In many cases, this is the best solution to “fix” a flaky test. We don’t need to invest much time in investigating the issue and we have fixed the symptom. It depends on the test, however. If lives depend on the functionality that the test is covering, then you’ll want to fix the root cause!

Tactic #8: Fix flaky tests

Say we have disabled some flaky tests and added some retries to other tests. How can we make sure that we’re going to fix them properly in the future? A common tactic is to have a dedicated “engineering health” backlog to put these tasks into.

To increase the probability of these tasks getting done, it’s always better to do them right now, of course. Putting them in a backlog often means that they won’t get attention in the future.

To work effectively with backlogs like this, the team needs to have an established habit of working through the backlog. One option is a weekly rotating “eng health” role in the team: this person is not working on roadmap items but is instead responsible for working through the eng health backlog. Another is a regular “eng health” week, where the whole team works through that backlog.

If in doubt, however, fix things right now instead of putting them into a backlog.

Don’t let flaky tests control your day

Flaky tests can make our days miserable. Especially in old and crusty codebases, they can be intimidating. It’s tempting to just hit the “rerun” button and run the whole suite of tests again instead of spending the time on one of the more effective tactics like automatically re-running them or fixing them for good.

Think of the time you can save for yourself, future you, and your team if you invest some extra time to “unflake” the test! Given the right circumstances, we don’t even need to fix the root cause, but we can automatically have the CI or the testing framework re-run the flaky tests. While this doesn’t fix the root cause, it does fix the “time leak” the flaky test is causing for the developer team.

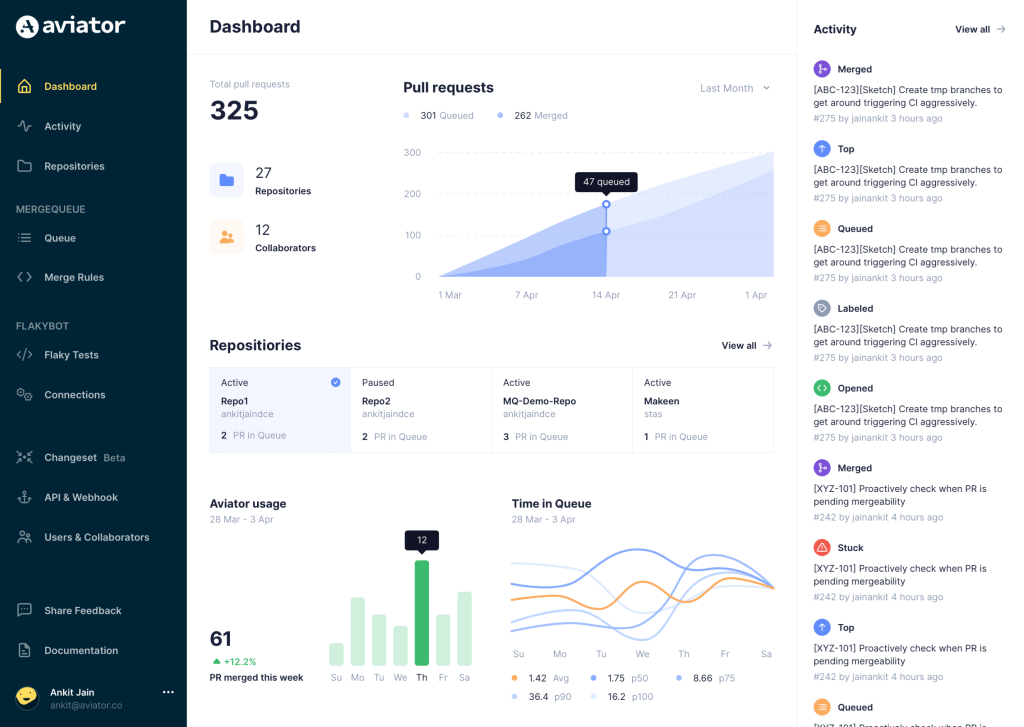

Aviator: Automate your cumbersome merge processes

Aviator automates tedious developer workflows by managing git Pull Requests (PRs) and continuous integration test (CI) runs to help your team avoid broken builds, streamline cumbersome merge processes, manage cross-PR dependencies, and handle flaky tests while maintaining their security compliance.

There are 4 key components to Aviator:

- MergeQueue – an automated queue that manages the merging workflow for your GitHub repository to help protect important branches from broken builds. The Aviator bot uses GitHub Labels to identify Pull Requests (PRs) that are ready to be merged, validates CI checks, processes semantic conflicts, and merges the PRs automatically.

- ChangeSets – workflows to synchronize validating and merging multiple PRs within the same repository or multiple repositories. Useful when your team often sees groups of related PRs that need to be merged together, or otherwise treated as a single broader unit of change.

- FlakyBot – a tool to automatically detect, take action on, and process results from flaky tests in your CI infrastructure.

- Stacked PRs CLI – a command line tool that helps developers manage cross-PR dependencies. This tool also automates syncing and merging of stacked PRs. Useful when your team wants to promote a culture of smaller, incremental PRs instead of large changes, or when your workflows involve keeping multiple, dependent PRs in sync.